Neural Networks and Function Approximation¶

This notebook introduces the fundamental concepts of using neural networks for function approximation, a core task in scientific machine learning (SciML). We will build up from the basic building block, the perceptron, to a single-layer network and demonstrate its capacity to approximate a simple function, connecting theory to practice using PyTorch.

Part 1: Problem set-up and motivation¶

The 1D Poisson Equation: Our Benchmark Problem¶

We begin with a familiar problem: the one-dimensional Poisson equation on the unit interval $[0, 1]$ with homogeneous Dirichlet boundary conditions:

$$-\frac{d^2u}{dx^2} = f(x), \quad x \in [0, 1]$$

subject to boundary conditions: $$u(0) = 0, \quad u(1) = 0$$

This equation models diverse physical phenomena: heat conduction in a rod, deflection of a loaded beam, or electrostatic potential in one dimension. The function $u(x)$ represents the unknown solution we seek, while $f(x)$ is the prescribed source term.

For our initial exploration, we choose a source term that gives a simple, known solution: $$f(x) = \pi^2 \sin(\pi x)$$

This choice yields the analytical solution: $$u(x) = \sin(\pi x)$$

We can verify this solution by direct substitution. The second derivative of $u(x) = \sin(\pi x)$ is $u''(x) = -\pi^2 \sin(\pi x)$, so: $$-\frac{d^2u}{dx^2} = -(-\pi^2 \sin(\pi x)) = \pi^2 \sin(\pi x) = f(x) \quad \checkmark$$

The boundary conditions are satisfied: $u(0) = \sin(0) = 0$ and $u(1) = \sin(\pi) = 0$ ✓
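The substitution above can also be checked numerically. The following is a minimal sketch, assuming a central-difference approximation of the second derivative with an illustrative step size `h = 1e-4`; the helper names `u_exact` and `f_source` are chosen here for illustration and are not used elsewhere in the notebook.

```python
import numpy as np

def u_exact(x):
    """Candidate solution u(x) = sin(pi x)."""
    return np.sin(np.pi * x)

def f_source(x):
    """Source term f(x) = pi^2 sin(pi x)."""
    return np.pi**2 * np.sin(np.pi * x)

h = 1e-4                          # illustrative step size (assumption)
x = np.linspace(0.1, 0.9, 9)      # a few interior points

# Central-difference approximation of u''(x)
u_xx = (u_exact(x + h) - 2.0 * u_exact(x) + u_exact(x - h)) / h**2

# Residual of -u'' = f, limited by truncation and round-off error
residual = np.max(np.abs(-u_xx - f_source(x)))

print(f"max |-u'' - f| = {residual:.2e}")
print(f"u(0) = {u_exact(0.0):.1e}, u(1) = {u_exact(1.0):.1e}")
```

The residual is small but not exactly zero, because the difference quotient itself is only an approximation; likewise `u(1)` evaluates to a value at round-off level rather than an exact zero.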

import numpy as np

import matplotlib.pyplot as plt

import torch

import torch.nn as nn

import torch.optim as optim

import random

SEED = 4321

random.seed(SEED)

np.random.seed(SEED)

torch.manual_seed(SEED)

# Set up plotting style

plt.rcParams['figure.figsize'] = (10, 6)

plt.rcParams['axes.grid'] = True

plt.rcParams['grid.alpha'] = 0.3

The Function Approximation Challenge¶

Consider this question: Can we learn to approximate $u(x) = \sin(\pi x)$ by observing only sparse data points?

Let's explore this with a concrete example.

# Generate sparse training data

n_train = 15

# Training points - sparse and noisy

x_train = np.random.rand(n_train)

x_train[0], x_train[-1] = 0, 1 # Include boundaries

x_train = np.sort(x_train)

# Target function: sin(πx)

def target_func(x):
    """Target function: sin(πx)"""
    return np.sin(np.pi * x)

y_train = target_func(x_train) + 0.02 * np.random.randn(n_train) # Add noise

# Dense grid for visualization

x_test = np.linspace(0, 1, 200)

y_exact = target_func(x_test)

# Visualize the problem

plt.figure(figsize=(10, 5))

plt.plot(x_test, y_exact, 'b-', alpha=0.3, linewidth=2, label='True solution')

plt.scatter(x_train, y_train, s=50, c='red', zorder=5, label=f'{n_train} noisy samples')

plt.xlabel('x')

plt.ylabel('u(x)')

plt.legend()

plt.title('The Challenge: Learn u(x) from Sparse, Noisy Data')

plt.show()

print(f"Training with {n_train} noisy data points")

Training with 15 noisy data points

Polynomial Approximation: Why It Fails¶

Let's first try the classical approach: polynomial fitting. We'll see that high-degree polynomials fitted to sparse, noisy data oscillate between the data points, a behavior closely related to Runge's phenomenon.

from numpy.polynomial import Polynomial

# Try different polynomial degrees

degrees = [5, 10, 14] # n_train - 1 = 14 is the maximum meaningful degree

poly_models = {}

errors = {}

fig, axes = plt.subplots(1, 3, figsize=(15, 5))

for idx, degree in enumerate(degrees):
    # Fit polynomial
    poly = Polynomial.fit(x_train, y_train, degree)
    y_poly = poly(x_test)
    poly_models[f'Poly-{degree}'] = y_poly

    # Compute errors
    train_mse = np.mean((poly(x_train) - y_train)**2)
    test_error = np.max(np.abs(y_poly - y_exact))
    errors[degree] = {'train_mse': train_mse, 'test_error': test_error}

    # Plot
    ax = axes[idx]
    ax.plot(x_test, y_exact, 'k--', alpha=0.5, linewidth=2, label='True')
    ax.plot(x_test, y_poly, 'b-', linewidth=2, label='Polynomial')
    ax.scatter(x_train, y_train, s=30, c='red', zorder=5, label='Data')
    ax.set_xlabel('x')
    ax.set_ylabel('u(x)')
    ax.set_title(f'Degree {degree}\nTrain MSE: {train_mse:.2e}\nMax Error: {test_error:.2e}')
    ax.legend()
    ax.set_ylim([-0.5, 1.5])

plt.suptitle('Runge\'s Phenomenon: Higher Degree → Worse Oscillations', fontsize=14)

plt.tight_layout()

plt.show()

# Print error summary

print("Polynomial Approximation Results:")

print("-" * 40)

for degree, err in errors.items():
    print(f"Degree {degree:2d}: Train MSE = {err['train_mse']:.4e}, Max Error = {err['test_error']:.4e}")

Polynomial Approximation Results:
----------------------------------------
Degree 5: Train MSE = 1.6237e-04, Max Error = 2.2792e-02
Degree 10: Train MSE = 1.6823e-05, Max Error = 3.0909e-02
Degree 14: Train MSE = 9.1711e-27, Max Error = 8.5812e-01

Key Observation: As polynomial degree increases:

- Training error decreases (eventually reaching zero for degree n-1)

- But test error increases due to wild oscillations between data points

- This is the behavior behind Runge's phenomenon, a well-known limitation of high-degree polynomial interpolation on equispaced or poorly distributed nodes

This motivates the need for alternative approaches like neural networks that can provide smooth approximations without these oscillations.
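To separate the oscillation effect from the noise in our data, here is a minimal sketch of the classical Runge example. It is not part of the experiment above: it assumes the standard test function $1/(1 + 25x^2)$ on $[-1, 1]$, noise-free samples at equispaced nodes, and exact interpolation (degree equal to the number of nodes minus one). The names `runge` and `interpolation_error` are illustrative.

```python
import numpy as np
from numpy.polynomial import Polynomial

def runge(x):
    """Classical Runge test function (assumed here for illustration)."""
    return 1.0 / (1.0 + 25.0 * x**2)

def interpolation_error(degree, n_eval=2001):
    """Max error of the interpolant through degree + 1 equispaced nodes."""
    nodes = np.linspace(-1.0, 1.0, degree + 1)
    # With degree + 1 nodes the least-squares fit interpolates the data
    poly = Polynomial.fit(nodes, runge(nodes), degree)
    x_eval = np.linspace(-1.0, 1.0, n_eval)
    return np.max(np.abs(poly(x_eval) - runge(x_eval)))

runge_errors = {deg: interpolation_error(deg) for deg in (5, 10, 20)}
for deg, err in runge_errors.items():
    print(f"Degree {deg:2d}: max error = {err:.3e}")
```

Even without any noise, the maximum error grows with the degree, and the largest deviations appear near the ends of the interval.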

Traditional Methods: Finite Difference¶

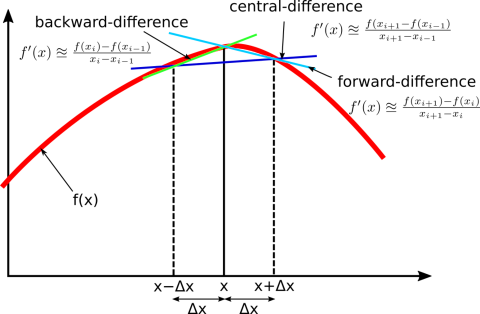

Traditional numerical methods like the Finite Difference Method or Finite Element Method solve PDEs by discretizing the domain into a grid or mesh. They approximate the solution $u(x)$ by finding its values at these specific, discrete points.

For example, the Finite Difference method approximates the second derivative: $$\frac{d^2u}{dx^2} \approx \frac{u_{i+1} - 2u_i + u_{i-1}}{h^2}$$ This transforms the differential equation into a system of algebraic equations for the values $u_i$ at grid points $x_i$. The result is a discrete representation of the solution.

# Simple finite difference solution for comparison

def source_function(x):
    """Source term for Poisson equation: f(x) = π²sin(πx)"""
    return np.pi**2 * np.sin(np.pi * x)

def solve_poisson_fd(n_points=101):
    """
    Solve 1D Poisson equation: -u'' = f(x) using finite differences
    with homogeneous Dirichlet boundary conditions.
    """
    # Grid
    x_fd = np.linspace(0, 1, n_points)
    h = 1.0 / (n_points - 1)

    # Tridiagonal matrix for -u'' (central difference)
    n_interior = n_points - 2
    A = np.zeros((n_interior, n_interior))
    np.fill_diagonal(A, 2.0 / h**2)
    np.fill_diagonal(A[1:, :], -1.0 / h**2)
    np.fill_diagonal(A[:, 1:], -1.0 / h**2)

    # Right-hand side (source function at interior points)
    f_rhs = source_function(x_fd[1:-1])

    # Solve linear system A u_interior = f_rhs
    u_interior = np.linalg.solve(A, f_rhs)

    # Complete solution with boundary conditions
    u_fd = np.zeros(n_points)
    u_fd[0] = 0   # u(0) = 0
    u_fd[-1] = 0  # u(1) = 0
    u_fd[1:-1] = u_interior

    return x_fd, u_fd

# Solve using finite differences with different resolutions

x_fd_coarse, u_fd_coarse = solve_poisson_fd(11) # Coarse grid

x_fd_fine, u_fd_fine = solve_poisson_fd(51) # Fine grid

# Create comparison plot

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 5))

# Coarse grid

ax1.plot(x_test, y_exact, 'b-', linewidth=2, label='Exact')

ax1.plot(x_fd_coarse, u_fd_coarse, 'ro-', markersize=6, label=f'FD (11 points)')

ax1.set_xlabel('x')

ax1.set_ylabel('u(x)')

ax1.set_title('Finite Difference: Coarse Grid')

ax1.legend()

ax1.grid(True, alpha=0.3)

# Fine grid

ax2.plot(x_test, y_exact, 'b-', linewidth=2, label='Exact')

ax2.plot(x_fd_fine, u_fd_fine, 'ro-', markersize=3, label=f'FD (51 points)')

ax2.set_xlabel('x')

ax2.set_ylabel('u(x)')

ax2.set_title('Finite Difference: Fine Grid')

ax2.legend()

ax2.grid(True, alpha=0.3)

plt.suptitle('Traditional Method: Finite Differences')

plt.tight_layout()

plt.show()

# Compute errors

error_coarse = np.max(np.abs(u_fd_coarse - target_func(x_fd_coarse)))

error_fine = np.max(np.abs(u_fd_fine - target_func(x_fd_fine)))

print(f"FD Coarse (11 points): Max error = {error_coarse:.4e}")

print(f"FD Fine (51 points): Max error = {error_fine:.4e}")

print(f"Error reduction factor: {error_coarse/error_fine:.1f}x")

FD Coarse (11 points): Max error = 8.2654e-03
FD Fine (51 points): Max error = 3.2905e-04
Error reduction factor: 25.1x
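The reduction factor of about 25 for a 5 times smaller grid spacing is what we expect from a second-order scheme, since $5^2 = 25$. A minimal self-contained sketch that estimates the observed order of accuracy follows; the helper `fd_max_error` is illustrative and rebuilds the same tridiagonal system with `np.eye` instead of reusing `solve_poisson_fd`.

```python
import numpy as np

def fd_max_error(n_points):
    """Max nodal error of the central-difference solution of -u'' = pi^2 sin(pi x)."""
    x = np.linspace(0.0, 1.0, n_points)
    h = x[1] - x[0]
    m = n_points - 2                      # number of interior nodes
    # Tridiagonal matrix (1/h^2) * tridiag(-1, 2, -1)
    A = (2.0 * np.eye(m) - np.eye(m, k=1) - np.eye(m, k=-1)) / h**2
    u = np.zeros(n_points)                # boundary values stay at 0
    u[1:-1] = np.linalg.solve(A, np.pi**2 * np.sin(np.pi * x[1:-1]))
    return h, np.max(np.abs(u - np.sin(np.pi * x)))

h_coarse, e_coarse = fd_max_error(11)
h_fine, e_fine = fd_max_error(51)

# Observed order p from the model e ~ C * h^p
order = np.log(e_coarse / e_fine) / np.log(h_coarse / h_fine)
print(f"observed order of accuracy: {order:.2f}")
```

An observed order close to 2 matches the $O(h^2)$ truncation error of the central-difference stencil.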

Part 2: Mathematical Foundations¶

Weierstrass Polynomial Approximation Theorem¶

Weierstrass Approximation Theorem (1885): If $f$ is a continuous function on a closed and bounded interval $[a, b]$, then for any $\varepsilon > 0$, there exists a polynomial $P(x)$ such that

$|f(x) - P(x)| < \varepsilon$

for all $x \in [a, b]$.

What This Means¶

The theorem tells us that any continuous function can be approximated arbitrarily closely by polynomials. No matter how "weird" or complicated a continuous function might be, we can always find a polynomial that gets as close as we want to that function over any closed interval.

This is remarkable because:

- Polynomials are among the simplest functions to work with computationally

- They can be differentiated and integrated easily

- Yet they're dense in the space of continuous functions

Historical Context¶

Karl Weierstrass proved this theorem in 1885, though the result was actually known to him since 1872. It was groundbreaking because it showed that despite polynomials seeming "simple," they could approximate any continuous behavior.

Constructive Proof: Bernstein Polynomials¶

One elegant way to prove this theorem constructively is using Bernstein polynomials. For a function $f$ on $[0,1]$, the $n$-th Bernstein polynomial is:

$B_n(x) = \sum_{k=0}^n f(k/n) \cdot \binom{n}{k} \cdot x^k \cdot (1-x)^{n-k}$

where $\binom{n}{k}$ is the binomial coefficient. As $n \to \infty$, $B_n(x)$ converges uniformly to $f(x)$.

Key Insight¶

The theorem bridges the gap between the abstract world of arbitrary continuous functions and the concrete, computational world of polynomials. It guarantees that, on a bounded interval, any continuous function can be replaced by a polynomial at the cost of an arbitrarily small uniform error, although the degree required may be very large.

# Convergence analysis: Error vs Polynomial Degree

from scipy.special import comb

def bernstein_polynomial(f, n, x):
    """Compute n-th Bernstein polynomial approximation"""
    result = np.zeros_like(x)
    for k in range(n + 1):
        basis = comb(n, k) * (x ** k) * ((1 - x) ** (n - k))
        result += f(k/n) * basis
    return result

# Test convergence

#x_test_bern = np.linspace(0, 1, 1000)

#y_true_bern = target_func(x_test_bern)

degrees = [5, 10, 20, 50, 100, 200]

errors = []

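for n in degrees:
    # Note: the error is measured at the 15 training points against the noisy
    # samples y_train, so it levels off near the noise amplitude (noise std 0.02)
    y_approx = bernstein_polynomial(target_func, n, x_train)
    max_error = np.max(np.abs(y_train - y_approx))
    errors.append(max_error)

# Reference curve O(1/sqrt(n)): the worst-case rate implied by the
# modulus-of-continuity bound for Lipschitz-continuous functions
theoretical = 1.0 / np.sqrt(degrees)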

plt.figure(figsize=(10, 6))

plt.loglog(degrees, errors, 'bo-', label='Bernstein Approximation', linewidth=2, markersize=8)

plt.loglog(degrees, theoretical, 'r--', label='O(1/√n) theoretical', linewidth=2)

plt.xlabel('Polynomial Degree n')

plt.ylabel('Maximum Error')

plt.title('Bernstein Polynomial Convergence')

plt.legend()

plt.grid(True, which="both", ls="-", alpha=0.2)

plt.show()

print(f"Convergence rate: {np.polyfit(np.log(degrees[-3:]), np.log(errors[-3:]), 1)[0]:.2f}")

print("(Theoretical: -0.5 for O(1/√n))")

Convergence rate: -0.04
(Theoretical: -0.5 for O(1/√n))

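The rate $O(1/\sqrt{n})$ is a worst-case bound for Lipschitz-continuous functions; smoother targets converge faster. The recorded slope of $-0.04$ does not contradict the theory: it was obtained by measuring the error against the noisy samples y_train at the 15 training points, so the measured error levels off near the noise amplitude instead of continuing to decrease. Uniform convergence to the noise-free target is still guaranteed, which is the content of the Weierstrass Approximation Theorem: any continuous function on $[0,1]$ can be uniformly approximated by polynomials to arbitrary precision.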
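To see the convergence of the Bernstein polynomials themselves, the error can be measured against the noise-free target on a dense grid. The following is a minimal sketch under that assumption; it uses `math.comb` from the standard library instead of `scipy.special.comb`, and the names `bernstein`, `x_dense` and `bern_errors` are illustrative. For a twice-differentiable target, Voronovskaya's theorem gives the asymptotic error $x(1-x)f''(x)/(2n)$, so we would expect a fitted slope close to $-1$ here.

```python
import math
import numpy as np

def bernstein(f, n, x):
    """Evaluate the n-th Bernstein polynomial of f at points x in [0, 1]."""
    result = np.zeros_like(x, dtype=float)
    for k in range(n + 1):
        # Convert the (possibly huge) integer binomial coefficient to float
        basis = float(math.comb(n, k)) * x**k * (1.0 - x)**(n - k)
        result += f(k / n) * basis
    return result

def f(x):
    return np.sin(np.pi * x)

x_dense = np.linspace(0.0, 1.0, 1001)
ns = np.array([50, 100, 200])

# Max error against the noise-free target on the dense grid
bern_errors = np.array(
    [np.max(np.abs(f(x_dense) - bernstein(f, n, x_dense))) for n in ns]
)

# Slope of log(error) versus log(n)
slope = np.polyfit(np.log(ns), np.log(bern_errors), 1)[0]
print(f"fitted slope: {slope:.2f}")
```

The slope is estimated from only three degrees, so it should be read as a rough indication of the rate rather than a precise measurement.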

Why This Proves the Weierstrass Theorem¶

The Bernstein polynomials provide a constructive proof of the Weierstrass Approximation Theorem:

Guaranteed Convergence: For any continuous function $f$ on $[0,1]$, the Bernstein polynomials $B_n(f;x)$ converge uniformly to $f$ as $n \to \infty$

Explicit Construction: Unlike existence proofs, Bernstein gives us an explicit formula: $$B_n(f; x) = \sum_{k=0}^{n} f\left(\frac{k}{n}\right) \binom{n}{k} x^k (1-x)^{n-k}$$

Convergence Rate: For functions with modulus of continuity $\omega(f; \delta)$, the error satisfies: $$|f(x) - B_n(f; x)| \leq \frac{3}{2} \omega\left(f; \frac{1}{\sqrt{n}}\right)$$

Key Properties:

- Positivity: If $f \geq 0$, then $B_n(f) \geq 0$

- Monotonicity: If $f$ is increasing, so is $B_n(f)$

- End-point interpolation: $B_n(f; 0) = f(0)$ and $B_n(f; 1) = f(1)$

This demonstrates that any continuous function can be approximated arbitrarily well by polynomials, a classical density result that the universal approximation theorems for neural networks parallel.

From Polynomials to Neural Networks: The Weierstrass Approximation Theorem¶

Before diving into the Universal Approximation Theorem for neural networks, let's explore its historical predecessor: the Weierstrass Approximation Theorem (1885). This foundational result shows that polynomials can approximate any continuous function, providing the mathematical intuition for why neural networks work.

The Weierstrass Approximation Theorem¶

Theorem (Weierstrass, 1885): Every continuous function on a closed interval $[a, b]$ can be uniformly approximated as closely as desired by a polynomial function.

Formally: For any continuous function $f: [a, b] \to \mathbb{R}$ and any $\epsilon > 0$, there exists a polynomial $p(x)$ such that:

$$\sup_{x \in [a,b]} |f(x) - p(x)| < \epsilon$$

This theorem tells us that the set of polynomials is dense in the space of continuous functions under the uniform norm.

Why This Matters for Neural Networks¶

The Weierstrass theorem establishes a crucial principle: simple building blocks (monomials $x^n$) can approximate arbitrarily complex continuous functions. Neural networks follow the same principle but with different building blocks:

- Polynomials: Build from monomials $(1, x, x^2, x^3, ...)$

- Neural Networks: Build from activation functions (ReLU, sigmoid, tanh, ...)

Both achieve universal approximation, but neural networks often do it more efficiently!

import numpy as np

import matplotlib.pyplot as plt

from numpy.polynomial import Polynomial

def approximate_with_polynomial(func, x_range, degree, title="Polynomial Approximation"):
    """Approximate a function using polynomial regression (least squares)"""
    x = np.linspace(x_range[0], x_range[1], 100)
    y = func(x)

    # Fit polynomial using numpy
    poly = Polynomial.fit(x, y, degree)
    y_approx = poly(x)

    # Calculate approximation error
    error = np.max(np.abs(y - y_approx))

    return poly, error

# Target function: sin(πx)

target_func = lambda x: np.sin(np.pi * x)

print("Weierstrass Approximation of sin(πx) with Polynomials")

print("=" * 60)

# Show increasing polynomial degrees

degrees = [3, 5, 9]

colors = ['red', 'green', 'orange']

errors = []

# Create single figure with two subplots

plt.figure(figsize=(15, 6))

# First subplot: All approximations together

plt.subplot(1, 2, 1)

# Plot target function first

x = np.linspace(0, 1, 100)

y = target_func(x)

plt.plot(x, y, 'b-', linewidth=3, label='Target: $\\sin(\\pi x)$')

# Plot approximations for each degree

for i, degree in enumerate(degrees):
    poly, error = approximate_with_polynomial(
        target_func, [0, 1], degree,
        f"Polynomial Approximation (Degree {degree})"
    )

    # Get approximation data
    x = np.linspace(0, 1, 100)
    y = target_func(x)
    y_approx = poly(x)
    errors.append(error)

    # Plot approximation with different color
    plt.plot(x, y_approx, '--', color=colors[i], linewidth=2,
             label=f'Degree {degree} (error: {error:.4f})')

    print(f"Degree {degree:2d}: Max error = {error:.6f}")

    # Print polynomial coefficients
    # Note: Polynomial.fit stores coefficients for the scaled variable
    # t = 2x - 1 (fit domain [0, 1] mapped to the window [-1, 1]), so the "x"
    # printed below is really t; poly.convert().coef gives coefficients in x
    coeffs = poly.coef
    print(f" Polynomial: p(x) = ", end="")
    terms = []
    for j, coef in enumerate(coeffs):
        if abs(coef) > 1e-10:  # Skip near-zero coefficients
            if j == 0:
                terms.append(f"{coef:.3f}")
            elif j == 1:
                terms.append(f"{coef:+.3f}x")
            else:
                terms.append(f"{coef:+.3f}x^{j}")
    print(" ".join(terms[:4]) + " ...")  # Show first few terms
    print()

plt.xlabel('x')

plt.ylabel('y')

plt.title('Polynomial Approximations of $\\sin(\\pi x)$')

plt.legend()

plt.grid(True, alpha=0.3)

# Second subplot: All errors together

plt.subplot(1, 2, 2)

for i, degree in enumerate(degrees):
    poly, error = approximate_with_polynomial(
        target_func, [0, 1], degree,
        f"Polynomial Approximation (Degree {degree})"
    )

    # Get error data
    x = np.linspace(0, 1, 100)
    y = target_func(x)
    y_approx = poly(x)

    # Plot error with same color as approximation
    plt.plot(x, np.abs(y - y_approx), '-', color=colors[i], linewidth=2,
             label=f'Degree {degree}')

plt.xlabel('x')

plt.ylabel('|Error|')

plt.title('Approximation Errors Comparison')

plt.legend()

plt.grid(True, alpha=0.3)

plt.yscale('log') # Log scale for better visualization

plt.tight_layout()

plt.show()

Weierstrass Approximation of sin(πx) with Polynomials
============================================================
Degree 3: Max error = 0.047466
 Polynomial: p(x) = 0.979 -1.027x^2 ...

Degree 5: Max error = 0.001126
 Polynomial: p(x) = 1.000 -1.224x^2 +0.226x^4 ...

Degree 9: Max error = 0.000000
 Polynomial: p(x) = 1.000 -1.234x^2 +0.254x^4 -0.021x^6 ...
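A note on reading the printed coefficients: `Polynomial.fit` maps the fit domain (here $[0, 1]$) to the window $[-1, 1]$, so `poly.coef` refers to the scaled variable $t = 2x - 1$ rather than to $x$. That is why only even powers appear: in terms of $t$, the target is $\sin(\pi x) = \cos(\pi t / 2)$, an even function. The following minimal sketch shows both sets of coefficients for the degree-5 fit; the variable names are illustrative.

```python
import numpy as np
from numpy.polynomial import Polynomial

x = np.linspace(0.0, 1.0, 100)
y = np.sin(np.pi * x)

poly = Polynomial.fit(x, y, 5)

scaled_coef = poly.coef               # coefficients in t = 2x - 1
unscaled_coef = poly.convert().coef   # coefficients in x itself

print("domain:", poly.domain, "window:", poly.window)
print("coefficients in t:", np.round(scaled_coef, 3))
print("coefficients in x:", np.round(unscaled_coef, 3))

# Both coefficient sets describe the same function:
# evaluate the plain power series in x and compare with poly(x)
p_from_x_coef = sum(c * x**j for j, c in enumerate(unscaled_coef))
print("max difference:", np.max(np.abs(p_from_x_coef - poly(x))))
```

Working in the scaled variable is a deliberate design choice in NumPy: it keeps the least-squares problem well conditioned, so `convert()` is best used for display rather than for further computation at high degree.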

Convergence Analysis¶

As we increase the polynomial degree, the approximation improves:

# Plot convergence

plt.figure(figsize=(10, 4))

plt.subplot(1, 2, 1)

plt.plot(degrees, errors, 'bo-', linewidth=2, markersize=8)

plt.xlabel('Polynomial Degree')

plt.ylabel('Maximum Approximation Error')

plt.title('Weierstrass Convergence')

plt.grid(True, alpha=0.3)

plt.yscale('log')

plt.subplot(1, 2, 2)

# Compare different degrees visually

x = np.linspace(0, 1, 200)

y_true = target_func(x)

plt.plot(x, y_true, 'k-', linewidth=3, label='sin(πx)', alpha=0.8)

for i, degree in enumerate([3, 5, 9]):
    poly = Polynomial.fit(np.linspace(0, 1, 100),
                          target_func(np.linspace(0, 1, 100)), degree)
    plt.plot(x, poly(x), '--', linewidth=2, label=f'Degree {degree}', alpha=0.7)

plt.xlabel('x')

plt.ylabel('y')

plt.title('Multiple Polynomial Approximations')

plt.legend()

plt.grid(True, alpha=0.3)

plt.ylim(-1.5, 1.5)

plt.tight_layout()

plt.show()

Key Observations:

1. Higher-degree polynomials provide a better approximation
2. For a smooth target such as $\sin(\pi x)$, the error decreases roughly exponentially with polynomial degree
3. But high-degree polynomials can be unstable (Runge's phenomenon)

Bernstein Polynomials: A Constructive Proof¶

One elegant proof of the Weierstrass theorem uses Bernstein polynomials, which provide an explicit construction:

$$B_n(f; x) = \sum_{k=0}^{n} f\left(\frac{k}{n}\right) \binom{n}{k} x^k (1-x)^{n-k}$$

These polynomials converge uniformly to $f$ as $n \to \infty$.

def bernstein_polynomial(f, n, x):
    """Compute n-th Bernstein polynomial approximation"""
    from scipy.special import comb

    result = np.zeros_like(x)
    for k in range(n + 1):
        # Bernstein basis polynomial
        basis = comb(n, k) * (x ** k) * ((1 - x) ** (n - k))
        # Weight by function value at k/n
        result += f(k / n) * basis
    return result

# Demonstrate Bernstein approximation

plt.figure(figsize=(12, 4))

x = np.linspace(0, 1, 200)

y_true = target_func(x)

degrees = [5, 10, 20, 40]

for i, n in enumerate(degrees):
    plt.subplot(1, 4, i + 1)
    y_bernstein = bernstein_polynomial(target_func, n, x)
    plt.plot(x, y_true, 'b-', linewidth=2, label='sin(πx)', alpha=0.7)
    plt.plot(x, y_bernstein, 'r--', linewidth=2, label=f'Bernstein n={n}')
    error = np.max(np.abs(y_true - y_bernstein))
    plt.title(f'n = {n}\nError: {error:.4f}')
    plt.xlabel('x')
    if i == 0:
        plt.ylabel('y')
    plt.legend(loc='upper right', fontsize=8)
    plt.grid(True, alpha=0.3)
    plt.ylim(-1.2, 1.2)

plt.suptitle('Bernstein Polynomial Approximation', fontsize=14, fontweight='bold')

plt.tight_layout()

plt.show()

Polynomials vs Neural Networks: A Direct Comparison¶

Let's compare polynomial approximation with neural network approximation for the same function:

import torch

import torch.nn as nn

import torch.optim as optim

# Simple neural network

class SimpleNN(nn.Module):
    def __init__(self, hidden_size):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(1, hidden_size),
            nn.Sigmoid(),
            nn.Linear(hidden_size, 1)
        )

    def forward(self, x):
        return self.net(x)

# Train neural network on the SAME sparse data as polynomial

def train_nn(x_train_data, y_train_data, hidden_size=20, epochs=5000):
    # Convert numpy arrays to torch tensors
    x_train_torch = torch.tensor(x_train_data.reshape(-1, 1), dtype=torch.float32)
    y_train_torch = torch.tensor(y_train_data.reshape(-1, 1), dtype=torch.float32)

    model = SimpleNN(hidden_size)
    optimizer = optim.Adam(model.parameters(), lr=0.1)
    criterion = nn.MSELoss()

    # Train with early stopping (based on the training loss)
    best_loss = float('inf')
    patience_counter = 0

    for epoch in range(epochs):
        optimizer.zero_grad()
        y_pred = model(x_train_torch)
        loss = criterion(y_pred, y_train_torch)
        loss.backward()
        optimizer.step()

        # Early stopping: track epochs without improvement
        if loss.item() < best_loss:
            best_loss = loss.item()
            patience_counter = 0
        else:
            patience_counter += 1

        if patience_counter > 500 or loss.item() < 1e-6:
            break

    return model

# Compare polynomial vs neural network - both trained on SAME 15 noisy points

plt.figure(figsize=(15, 5))

# Use the same test grid for evaluation

x_test = np.linspace(0, 1, 200)

y_true = target_func(x_test)

# Polynomial approximation on sparse data

poly_degree = 10

poly = Polynomial.fit(x_train, y_train, poly_degree) # Use our 15 sparse points

y_poly = poly(x_test)

poly_params = poly_degree + 1

# Neural network approximation on same sparse data

nn_hidden = 10

model = train_nn(x_train, y_train, nn_hidden, epochs=10000) # Use same 15 points

x_test_torch = torch.tensor(x_test.reshape(-1, 1), dtype=torch.float32)

with torch.no_grad():
    y_nn = model(x_test_torch).numpy().flatten()

nn_params = 1 * nn_hidden + nn_hidden + nn_hidden * 1 + 1 # weights + biases

# Plot comparison

plt.subplot(1, 3, 1)

plt.plot(x_test, y_true, 'k-', linewidth=3, label='Target', alpha=0.8)

plt.plot(x_test, y_poly, 'b--', linewidth=2, label=f'Polynomial (deg {poly_degree})')

plt.plot(x_test, y_nn, 'r:', linewidth=2, label=f'Neural Net ({nn_hidden} hidden)')

plt.scatter(x_train, y_train, color='black', s=30, zorder=5, alpha=0.5, label='Training Data')

plt.xlabel('x')

plt.ylabel('y')

plt.title('Function Approximation\n(Both trained on 15 sparse points)')

plt.legend()

plt.grid(True, alpha=0.3)

plt.subplot(1, 3, 2)

plt.plot(x_test, np.abs(y_true - y_poly), 'b-', linewidth=2,

label=f'Polynomial ({poly_params} params)')

plt.plot(x_test, np.abs(y_true - y_nn), 'r-', linewidth=2,

label=f'Neural Net ({nn_params} params)')

plt.xlabel('x')

plt.ylabel('|Error|')

plt.title('Approximation Error')

plt.legend()

plt.grid(True, alpha=0.3)

plt.yscale('log')

plt.subplot(1, 3, 3)

errors_comparison = {
    # Use the computed parameter counts so the labels match the models
    f'Polynomial\n({poly_params} params)': np.max(np.abs(y_true - y_poly)),
    f'Neural Net\n({nn_params} params)': np.max(np.abs(y_true - y_nn))
}

bars = plt.bar(errors_comparison.keys(), errors_comparison.values(),

color=['blue', 'red'], alpha=0.7)

plt.ylabel('Maximum Error')

plt.title('Error Comparison')

for bar, error in zip(bars, errors_comparison.values()):
    plt.text(bar.get_x() + bar.get_width()/2, bar.get_height() + 0.0001,
             f'{error:.4f}', ha='center', fontsize=10)

plt.grid(True, alpha=0.3, axis='y')

plt.suptitle('Fair Comparison: Polynomial vs Neural Network (Both Trained on 15 Sparse Points)',

fontsize=14, fontweight='bold')

plt.tight_layout()

plt.show()

print(f"\nComparison Summary (both trained on {n_train} sparse noisy points):")

print(f"Polynomial (degree {poly_degree}):")

print(f" Parameters: {poly_params}")

print(f" Max Error: {np.max(np.abs(y_true - y_poly)):.6f}")

print(f" Train MSE: {np.mean((poly(x_train) - y_train)**2):.6f}")

print(f"\nNeural Network ({nn_hidden} hidden units):")

print(f" Parameters: {nn_params}")

print(f" Max Error: {np.max(np.abs(y_true - y_nn)):.6f}")

# Calculate train MSE for NN

x_train_torch_eval = torch.tensor(x_train.reshape(-1, 1), dtype=torch.float32)

with torch.no_grad():
    y_train_pred = model(x_train_torch_eval).numpy().flatten()

print(f" Train MSE: {np.mean((y_train_pred - y_train)**2):.6f}")

print(f"\nKey Observation: NN provides smoother interpolation than polynomial on sparse data!")

Comparison Summary (both trained on 15 sparse noisy points):
Polynomial (degree 10):
 Parameters: 11
 Max Error: 0.030909
 Train MSE: 0.000017

Neural Network (10 hidden units):
 Parameters: 31
 Max Error: 0.047988
 Train MSE: 0.000186

Key Observation: NN provides smoother interpolation than polynomial on sparse data!

Note that in this run the degree-10 polynomial actually attains the lower maximum error (0.0309 versus 0.0480), using 11 parameters against the network's 31. The numbers therefore support a statement about smoothness at most, not about accuracy.

Limitations of Polynomial Approximation¶

While polynomials can theoretically approximate any continuous function, they have practical limitations:

- Runge's Phenomenon: High-degree polynomial interpolants, especially on equispaced nodes, oscillate wildly near the boundaries

- Global Support: Changing one coefficient affects the entire function

- Computational Instability: High-degree polynomials suffer from numerical issues

- Poor Extrapolation: Polynomials diverge rapidly outside the training interval

Neural networks address these limitations:

- Local Support: ReLU networks create piecewise linear approximations

- Stability: Bounded activations (sigmoid, tanh) prevent divergence

- Compositionality: Deep networks build complex functions from simple pieces

- Adaptivity: Networks learn where to place their "basis functions"

From Weierstrass to Universal Approximation¶

The progression from Weierstrass to neural networks represents an evolution in approximation theory:

- 1885 - Weierstrass: Polynomials are universal approximators

- 1989 - Cybenko: Networks with a single hidden layer of sigmoidal units are universal approximators

- Modern Deep Learning: For some function classes, deep networks can be exponentially more efficient than shallow ones

This historical perspective shows that neural networks are not magical – they're the latest chapter in a long mathematical story about approximating complex functions with simple building blocks!

Part 3: The Neural Network Approach: Function Approximation¶

Our goal is to train a neural network $u_{NN}(x; \theta)$ to approximate the continuous solution $u^*(x) = \sin(\pi x)$ over the interval $[0, 1]$. This is a function approximation problem.

The theoretical foundation for this approach is the Universal Approximation Theorem (UAT), which guarantees that a neural network with a suitable non-polynomial activation can approximate any continuous function on a compact domain to arbitrary accuracy, given enough neurons. We'll explore UAT in detail later in this notebook.

Traditional Numerical Method vs Neural Network: Discrete vs Continuous¶

Traditional numerical methods compute discrete values at grid points. In contrast, the Neural Network approach learns a continuous function $u_{NN}(x; \theta)$ that approximates the true solution $u^*(x)$ over the entire domain.

- This function is parameterized by the network's weights and biases $\theta$

- We train by adjusting $\theta$ so the network's output matches training data

- Once trained, we can evaluate the solution at any point, not just grid points

The Perceptron: Building Block of Neural Networks¶

A perceptron is a linear transformation followed by an activation function: $$\hat{y} = g(\mathbf{w}^T\mathbf{x} + b)$$

We'll start with the simplest case: no activation (linear perceptron), implement training from scratch, then add nonlinearity.
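As a quick illustration of the formula $\hat{y} = g(\mathbf{w}^T\mathbf{x} + b)$, here is a minimal sketch of a single forward pass, once with the identity (the linear case) and once with a sigmoid. The function names and the numerical values of `x`, `w` and `b` are made up for illustration.

```python
import numpy as np

def perceptron(x, w, b, g=lambda z: z):
    """Single perceptron g(w^T x + b); the default g is the identity (linear case)."""
    return g(np.dot(w, x) + b)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Illustrative values (assumptions, not taken from the notebook)
x = np.array([1.0, 2.0])
w = np.array([0.5, -0.25])
b = 0.1

z_linear = perceptron(x, w, b)               # 0.5*1 - 0.25*2 + 0.1
y_sigmoid = perceptron(x, w, b, g=sigmoid)   # sigmoid of the same pre-activation

print(f"linear output:  {z_linear:.4f}")
print(f"sigmoid output: {y_sigmoid:.4f}")
```

The only difference between the two calls is the choice of $g$: the pre-activation $\mathbf{w}^T\mathbf{x} + b$ is identical.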

import numpy as np

import matplotlib.pyplot as plt

# Generate linearly separable data

np.random.seed(42)

n = 50

# Two classes

X0 = np.random.randn(n, 2) * 0.5 + [-2, -2]

X1 = np.random.randn(n, 2) * 0.5 + [2, 2]

X = np.vstack([X0, X1])

y = np.hstack([np.zeros(n), np.ones(n)])

# Visualize just the data

plt.figure(figsize=(8, 6))

plt.scatter(X0[:, 0], X0[:, 1], c='blue', s=50, alpha=0.7, label='Class 0', edgecolors='darkblue')

plt.scatter(X1[:, 0], X1[:, 1], c='red', s=50, alpha=0.7, label='Class 1', edgecolors='darkred')

plt.xlabel('$x_1$', fontsize=12)

plt.ylabel('$x_2$', fontsize=12)

plt.title('Linearly Separable Data', fontsize=14)

plt.legend(fontsize=11)

plt.grid(True, alpha=0.3)

plt.axis('equal')

plt.tight_layout()

plt.show()

# Print some basic statistics about the data

print(f"Total samples: {len(X)}")

print(f"Class 0: {len(X0)} samples")

print(f"Class 1: {len(X1)} samples")

print(f"\nClass 0 center: [{X0.mean(axis=0)[0]:.2f}, {X0.mean(axis=0)[1]:.2f}]")

print(f"Class 1 center: [{X1.mean(axis=0)[0]:.2f}, {X1.mean(axis=0)[1]:.2f}]")

Total samples: 100
Class 0: 50 samples
Class 1: 50 samples

Class 0 center: [-2.07, -2.04]
Class 1 center: [1.95, 2.07]

Linear Perceptron in NumPy¶

First, a pure linear model: $\hat{y} = \mathbf{w}^T\mathbf{x} + b$

import numpy as np

import matplotlib.pyplot as plt

class LinearPerceptron:
    def __init__(self, dim):
        self.w = np.random.randn(dim) * 0.01
        self.b = 0.0

    def forward(self, x):
        """Compute output: y = w^T x + b"""
        return np.dot(x, self.w) + self.b

    def predict_batch(self, X):
        """Predict for multiple samples"""
        return X @ self.w + self.b

Training: Gradient Descent from Scratch¶

To train, we minimize the mean squared error loss: $$L = \frac{1}{2N}\sum_{i=1}^N (y_i - \hat{y}_i)^2$$

Using calculus, we derive the gradients:

For a single sample with prediction $\hat{y} = \mathbf{w}^T\mathbf{x} + b$ and loss $L = \frac{1}{2}(y - \hat{y})^2$:

Gradient w.r.t weights: $$\frac{\partial L}{\partial \mathbf{w}} = \frac{\partial L}{\partial \hat{y}} \cdot \frac{\partial \hat{y}}{\partial \mathbf{w}} = -(y - \hat{y}) \cdot \mathbf{x}$$

Gradient w.r.t bias: $$\frac{\partial L}{\partial b} = \frac{\partial L}{\partial \hat{y}} \cdot \frac{\partial \hat{y}}{\partial b} = -(y - \hat{y})$$

The update rule: $$\mathbf{w} \leftarrow \mathbf{w} + \eta(y - \hat{y})\mathbf{x}$$ $$b \leftarrow b + \eta(y - \hat{y})$$

where $\eta$ is the learning rate.
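The derived gradients can be sanity-checked against finite differences. The sketch below is illustrative only: it assumes random data generated with `np.random.default_rng(0)`, averages the per-sample gradients over the batch to match the loss $L = \frac{1}{2N}\sum_i (y_i - \hat{y}_i)^2$, and compares the result with central differences of step `eps = 1e-6`.

```python
import numpy as np

rng = np.random.default_rng(0)   # illustrative random data (assumption)
N, d = 20, 3
X = rng.normal(size=(N, d))
y = rng.normal(size=N)
w = rng.normal(size=d)
b = 0.3

def loss(w, b):
    """L = 1/(2N) * sum_i (y_i - y_hat_i)^2 with y_hat = X w + b."""
    return 0.5 * np.mean((y - (X @ w + b))**2)

# Analytic gradients: batch averages of -(y - y_hat) x and -(y - y_hat)
residual = y - (X @ w + b)
grad_w = -(X.T @ residual) / N
grad_b = -np.mean(residual)

# Central finite differences, one coordinate direction at a time
eps = 1e-6
num_grad_w = np.array([
    (loss(w + eps * e, b) - loss(w - eps * e, b)) / (2 * eps)
    for e in np.eye(d)
])
num_grad_b = (loss(w, b + eps) - loss(w, b - eps)) / (2 * eps)

print("max |grad_w - numerical|:", np.max(np.abs(grad_w - num_grad_w)))
print("|grad_b - numerical|:    ", abs(grad_b - num_grad_b))
```

Because the loss is quadratic in $\mathbf{w}$ and $b$, central differences are exact up to round-off, so both differences should be tiny.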

def train_linear_perceptron(model, X, y, lr=0.01, epochs=100):
    """Train using gradient descent"""
    losses = []

    for epoch in range(epochs):
        # Forward pass for all samples
        y_pred = model.predict_batch(X)

        # Compute loss
        loss = 0.5 * np.mean((y - y_pred)**2)
        losses.append(loss)

        # Compute gradients (vectorized)
        error = y_pred - y  # Note: gradient of MSE
        grad_w = X.T @ error / len(X)
        grad_b = np.mean(error)

        # Update parameters
        model.w -= lr * grad_w
        model.b -= lr * grad_b

        if (epoch + 1) % 25 == 0:
            print(f"Epoch {epoch+1}: loss={loss:.4f}")

    return losses

Example: Linear Classification¶

# Generate linearly separable data

np.random.seed(42)

n = 50

# Two classes

X0 = np.random.randn(n, 2) * 0.5 + [-2, -2]

X1 = np.random.randn(n, 2) * 0.5 + [2, 2]

X = np.vstack([X0, X1])

y = np.hstack([np.zeros(n), np.ones(n)])

# Train linear perceptron

model = LinearPerceptron(2)

losses = train_linear_perceptron(model, X, y, lr=0.1, epochs=100)

# Visualize

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4))

# Loss curve

ax1.plot(losses)

ax1.set_xlabel('Epoch')

ax1.set_ylabel('MSE Loss')

ax1.set_yscale('log')

ax1.grid(True, alpha=0.3)

# Decision boundary

xx, yy = np.meshgrid(np.linspace(-4, 4, 100), np.linspace(-4, 4, 100))

Z = model.predict_batch(np.c_[xx.ravel(), yy.ravel()]).reshape(xx.shape)

ax2.contour(xx, yy, Z, levels=[0.5], colors='g', linewidths=2)

ax2.contourf(xx, yy, Z, levels=[-1, 0.5, 2], alpha=0.3, colors=['blue', 'red'])

ax2.scatter(X0[:,0], X0[:,1], c='b', s=30, label='Class 0')

ax2.scatter(X1[:,0], X1[:,1], c='r', s=30, label='Class 1')

ax2.set_xlabel('$x_1$')

ax2.set_ylabel('$x_2$')

ax2.legend()

ax2.grid(True, alpha=0.3)

plt.tight_layout()

plt.show()

print(f"\nLearned parameters: w={model.w}, b={model.b:.3f}")

Epoch 25: loss=0.0041
Epoch 50: loss=0.0032
Epoch 75: loss=0.0032
Epoch 100: loss=0.0032

Learned parameters: w=[0.13149522 0.10857732], b=0.506

Limitation: Non-linear Patterns¶

Linear perceptrons fail on non-linearly separable data:

# Generate XOR-like pattern (not linearly separable)

# Similar to the data in relu.md visualization

np.random.seed(42)

# Class 0 - diagonal pattern (magenta points)

class0_X = np.array([

[-2.75, 0.27], [-3.63, 1.20], [-2.51, 1.95], [-1.85, 3.02],

[-0.81, 2.54], [0.03, 3.28], [1.82, 3.23], [3.37, 2.48],

[4.76, 1.96], [4.74, 0.82], [3.22, 1.02], [0.38, 1.22],

[-0.62, -0.04], [0.52, -0.44], [1.72, -0.31], [2.29, -1.63],

[0.87, -1.84], [-0.87, -1.52]

])

# Class 1 - upper band pattern (gold points)

class1_X = np.array([

[-5.33, 2.15], [-4.88, 3.79], [-3.99, 3.16], [-2.98, 4.30],

[-1.91, 6.07], [-1.06, 4.89], [0.78, 5.01], [-0.22, 6.47],

[1.43, 6.11], [2.98, 4.41], [4.50, 3.61], [5.13, 4.95],

[6.37, 3.01]

])

# Add some noise to make the pattern more challenging

noise_scale = 0.3

class0_X += np.random.normal(0, noise_scale, class0_X.shape)

class1_X += np.random.normal(0, noise_scale, class1_X.shape)

# Combine data

X_nonlinear = np.vstack([class0_X, class1_X])

y_nonlinear = np.hstack([np.zeros(len(class0_X)), np.ones(len(class1_X))])

# Normalize features for better training

X_mean = X_nonlinear.mean(axis=0)

X_std = X_nonlinear.std(axis=0)

X_nonlinear_norm = (X_nonlinear - X_mean) / X_std

# Try to fit with linear perceptron

model_linear = LinearPerceptron(2)

losses_linear = train_linear_perceptron(model_linear, X_nonlinear_norm, y_nonlinear, lr=0.1, epochs=100)

# Visualize failure of linear model

plt.figure(figsize=(15, 5))

# Plot 1: Loss curve

plt.subplot(1, 3, 1)

plt.plot(losses_linear, 'b-', linewidth=2)

plt.xlabel('Epoch')

plt.ylabel('Loss')

plt.title('Loss Not Converging to Zero')

plt.grid(True, alpha=0.3)

plt.ylim(0, max(losses_linear) * 1.1)

# Plot 2: Original space with linear boundary

plt.subplot(1, 3, 2)

# Create mesh for decision boundary

xx, yy = np.meshgrid(

np.linspace(X_nonlinear[:,0].min()-1, X_nonlinear[:,0].max()+1, 100),

np.linspace(X_nonlinear[:,1].min()-1, X_nonlinear[:,1].max()+1, 100)

)

# Normalize mesh points

mesh_norm = (np.c_[xx.ravel(), yy.ravel()] - X_mean) / X_std

Z_linear = model_linear.predict_batch(mesh_norm).reshape(xx.shape)

plt.contour(xx, yy, Z_linear, levels=[0.5], colors='g', linewidths=2, linestyles='--')

plt.scatter(class0_X[:,0], class0_X[:,1], c='magenta', s=50, edgecolor='purple',

linewidth=1.5, label='Class 0', alpha=0.7)

plt.scatter(class1_X[:,0], class1_X[:,1], c='gold', s=50, edgecolor='orange',

linewidth=1.5, label='Class 1', alpha=0.7)

plt.xlabel('$x_1$')

plt.ylabel('$x_2$')

plt.title('Linear Boundary Fails')

plt.legend()

plt.grid(True, alpha=0.3)

# Plot 3: Accuracy over epochs

plt.subplot(1, 3, 3)

# Calculate accuracy at each epoch

accuracies = []

model_temp = LinearPerceptron(2)

for epoch in range(100):

train_linear_perceptron(model_temp, X_nonlinear_norm, y_nonlinear, lr=0.1, epochs=1)

predictions = (model_temp.predict_batch(X_nonlinear_norm) > 0.5).astype(int)

accuracy = np.mean(predictions == y_nonlinear)

accuracies.append(accuracy)

plt.plot(accuracies, 'r-', linewidth=2)

plt.axhline(y=1.0, color='g', linestyle=':', alpha=0.5, label='Perfect accuracy')

plt.axhline(y=0.5, color='gray', linestyle=':', alpha=0.5, label='Random guess')

plt.xlabel('Epoch')

plt.ylabel('Accuracy')

plt.title('Classification Accuracy')

plt.legend()

plt.grid(True, alpha=0.3)

plt.ylim(0, 1.05)

plt.suptitle('Linear Perceptron Cannot Solve Non-Linearly Separable Data', fontsize=14, fontweight='bold')

plt.tight_layout()

plt.show()

print(f"Final loss: {losses_linear[-1]:.4f}")

print(f"Final accuracy: {accuracies[-1]:.2%}")

Epoch 25: loss=0.0531

Epoch 50: loss=0.0520

Epoch 75: loss=0.0520

Epoch 100: loss=0.0520

Final loss: 0.0520

Final accuracy: 87.10%

Solution: Adding Non-linear Activation¶

The linear perceptron fails because the data is not linearly separable. By adding a hidden layer with non-linear activation (ReLU), we can transform the input space into a feature space where the data becomes linearly separable.

Interactive Demo: Visualizing Nonlinear Transformation¶

This interactive demo illustrates how a combination of linear transformation and non-linearity can transform data in a way that linear transformations alone cannot. Observe how the data, initially not linearly separable in the input space (X), becomes separable after passing through a linear layer (Y) and then a non-linear activation (Z).

This provides intuition for why layers with non-linear activations are powerful: they can map data into a new space where complex patterns become simpler (potentially linearly separable), making them learnable by subsequent layers.
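To make this intuition concrete on the smallest possible case, here is a minimal sketch on the four XOR points. The weights are hand-picked for illustration (they are an assumption of this example, not the weights learned by the networks in this notebook). After the ReLU layer, a single linear readout separates the two classes.

```python
import numpy as np

# Four XOR points: the label is 1 when exactly one input is 1
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 0], dtype=float)

# Hand-picked (hypothetical) first layer: both units see x1 + x2,
# but the second unit only switches on once the sum exceeds 1
W1 = np.array([[1.0, 1.0],
               [1.0, 1.0]])        # shape (inputs, hidden), used as X @ W1
b1 = np.array([0.0, -1.0])

# Linear transformation followed by ReLU gives the hidden features
H = np.maximum(0.0, X @ W1 + b1)   # shape (4, 2)

# In feature space, one linear readout is enough
w2 = np.array([1.0, -2.0])
scores = H @ w2
pred = (scores > 0.5).astype(float)

print("hidden features:\n", H)
print("predictions:", pred, "targets:", y)
```

No choice of `w` and `b` applied directly to `X` can reproduce these labels, which is why the intermediate ReLU features matter.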

# Two-layer network with just 2 hidden units (matching relu.md solution)

class TwoNeuronNetwork:

def __init__(self):

# Initialize with rotation-like pattern (based on relu.md solution: -59 degrees, scale 2)

angle = -59 * np.pi / 180

scale = 2.0

# First layer: linear transformation (rotation + scaling)

self.W1 = np.array([

[np.cos(angle) * scale, -np.sin(angle) * scale],

[np.sin(angle) * scale, np.cos(angle) * scale]

]).T # Transpose to match input @ W1 convention

self.b1 = np.zeros(2)

# Output layer: combine the two ReLU features

self.W2 = np.random.randn(2, 1) * 0.1

self.b2 = np.zeros(1)

def relu(self, x):

return np.maximum(0, x)

def relu_derivative(self, x):

return (x > 0).astype(float)

def sigmoid(self, x):

return 1 / (1 + np.exp(-np.clip(x, -500, 500)))

def forward(self, X):

# First layer: linear transformation

self.z1 = X @ self.W1 + self.b1

# Apply ReLU activation

self.a1 = self.relu(self.z1)

# Output layer

self.z2 = self.a1 @ self.W2 + self.b2

self.a2 = self.sigmoid(self.z2)

return self.a2

def train(self, X, y, epochs=500, lr=0.5):

losses = []

for epoch in range(epochs):

# Forward pass

output = self.forward(X)

# Compute loss

eps = 1e-7

output_clipped = np.clip(output.flatten(), eps, 1 - eps)

loss = -np.mean(y * np.log(output_clipped) +

(1 - y) * np.log(1 - output_clipped))

losses.append(loss)

# Backward pass

m = len(X)

# Output layer gradients

dz2 = self.a2 - y.reshape(-1, 1)

dW2 = (self.a1.T @ dz2) / m

db2 = np.mean(dz2, axis=0)

# Update the output layer on every epoch

self.W2 -= lr * dW2

self.b2 -= lr * db2

# Keep the first-layer transformation fixed for the first 100 epochs, then fine-tune it

if epoch > 100:

da1 = dz2 @ self.W2.T

dz1 = da1 * self.relu_derivative(self.z1)

dW1 = (X.T @ dz1) / m

db1 = np.mean(dz1, axis=0)

self.W1 -= lr * 0.01 * dW1 # Small learning rate for first layer

self.b1 -= lr * 0.01 * db1

return losses

# Train the two-neuron network

np.random.seed(42)

model_twoneuron = TwoNeuronNetwork()

losses_twoneuron = model_twoneuron.train(X_nonlinear_norm, y_nonlinear, epochs=500, lr=1.0)

# Also train a properly initialized multi-neuron network for comparison

class OptimizedNetwork:

def __init__(self, input_dim=2, hidden_dim=4):

# Better initialization

self.W1 = np.random.randn(input_dim, hidden_dim) * 0.5

self.b1 = np.random.randn(hidden_dim) * 0.1

self.W2 = np.random.randn(hidden_dim, 1) * 0.5

self.b2 = np.zeros(1)

def relu(self, x):

return np.maximum(0, x)

def sigmoid(self, x):

return 1 / (1 + np.exp(-np.clip(x, -500, 500)))

def forward(self, X):

self.z1 = X @ self.W1 + self.b1

self.a1 = self.relu(self.z1)

self.z2 = self.a1 @ self.W2 + self.b2

return self.sigmoid(self.z2)

def train(self, X, y, epochs=500, lr=0.5):

losses = []

for epoch in range(epochs):

# Forward

output = self.forward(X)

# Loss

eps = 1e-7

output_clipped = np.clip(output.flatten(), eps, 1 - eps)

loss = -np.mean(y * np.log(output_clipped) +

(1 - y) * np.log(1 - output_clipped))

losses.append(loss)

# Backward

m = len(X)

dz2 = output - y.reshape(-1, 1)

dW2 = (self.a1.T @ dz2) / m

db2 = np.mean(dz2, axis=0)

da1 = dz2 @ self.W2.T

dz1 = da1 * (self.z1 > 0)

dW1 = (X.T @ dz1) / m

db1 = np.mean(dz1, axis=0)

# Plain gradient descent update (no momentum)

self.W2 -= lr * dW2

self.b2 -= lr * db2

self.W1 -= lr * dW1

self.b1 -= lr * db1

return losses

model_optimized = OptimizedNetwork(2, 4)

losses_optimized = model_optimized.train(X_nonlinear_norm, y_nonlinear, epochs=500, lr=0.5)

# Visualize all three models

plt.figure(figsize=(15, 10))

# Row 1: Loss curves

plt.subplot(2, 3, 1)

plt.plot(losses_linear, 'b-', label='Linear', alpha=0.7)

plt.plot(losses_twoneuron, 'g-', label='2 Hidden Units', alpha=0.7)

plt.plot(losses_optimized, 'r-', label='4 Hidden Units', alpha=0.7)

plt.xlabel('Epoch')

plt.ylabel('Loss')

plt.title('Training Loss Comparison')

plt.legend()

plt.grid(True, alpha=0.3)

plt.yscale('log')

# Row 1: Decision boundaries for each model

models = [

(model_linear, 'Linear Model', plt.subplot(2, 3, 2)),

(model_twoneuron, '2 Hidden Units (Like relu.md)', plt.subplot(2, 3, 3))

]

for model, title, ax in models:

plt.sca(ax)

if hasattr(model, 'predict_batch'):

Z = model.predict_batch(mesh_norm).reshape(xx.shape)

else:

Z = model.forward(mesh_norm).reshape(xx.shape)

plt.contourf(xx, yy, Z, levels=20, cmap='RdBu', alpha=0.4)

plt.contour(xx, yy, Z, levels=[0.5], colors='g', linewidths=2)

plt.scatter(class0_X[:,0], class0_X[:,1], c='magenta', s=30,

edgecolor='purple', linewidth=1, alpha=0.7)

plt.scatter(class1_X[:,0], class1_X[:,1], c='gold', s=30,

edgecolor='orange', linewidth=1, alpha=0.7)

plt.title(title)

plt.grid(True, alpha=0.3)

# Row 2: Feature space visualization

plt.subplot(2, 3, 4)

# Visualize the 2-neuron hidden layer features

hidden_2neuron = model_twoneuron.relu(X_nonlinear_norm @ model_twoneuron.W1 + model_twoneuron.b1)

plt.scatter(hidden_2neuron[y_nonlinear==0, 0], hidden_2neuron[y_nonlinear==0, 1],

c='magenta', s=30, edgecolor='purple', linewidth=1, alpha=0.7, label='Class 0')

plt.scatter(hidden_2neuron[y_nonlinear==1, 0], hidden_2neuron[y_nonlinear==1, 1],

c='gold', s=30, edgecolor='orange', linewidth=1, alpha=0.7, label='Class 1')

plt.xlabel('Hidden Unit 1')

plt.ylabel('Hidden Unit 2')

plt.title('2-Neuron Feature Space (After ReLU)')

plt.legend()

plt.grid(True, alpha=0.3)

plt.subplot(2, 3, 5)

Z_optimized = model_optimized.forward(mesh_norm).reshape(xx.shape)

plt.contourf(xx, yy, Z_optimized, levels=20, cmap='RdBu', alpha=0.4)

plt.contour(xx, yy, Z_optimized, levels=[0.5], colors='g', linewidths=2)

plt.scatter(class0_X[:,0], class0_X[:,1], c='magenta', s=30,

edgecolor='purple', linewidth=1, alpha=0.7)

plt.scatter(class1_X[:,0], class1_X[:,1], c='gold', s=30,

edgecolor='orange', linewidth=1, alpha=0.7)

plt.title('4 Hidden Units')

plt.grid(True, alpha=0.3)

# Row 2: Accuracy comparison

plt.subplot(2, 3, 6)

linear_acc = np.mean((model_linear.predict_batch(X_nonlinear_norm) > 0.5) == y_nonlinear)

twoneuron_acc = np.mean((model_twoneuron.forward(X_nonlinear_norm).flatten() > 0.5) == y_nonlinear)

optimized_acc = np.mean((model_optimized.forward(X_nonlinear_norm).flatten() > 0.5) == y_nonlinear)

bars = plt.bar(['Linear', '2 Hidden', '4 Hidden'],

[linear_acc, twoneuron_acc, optimized_acc],

color=['blue', 'green', 'red'], alpha=0.7)

plt.ylabel('Accuracy')

plt.title('Final Accuracy Comparison')

plt.ylim(0, 1.1)

for bar, acc in zip(bars, [linear_acc, twoneuron_acc, optimized_acc]):

plt.text(bar.get_x() + bar.get_width()/2, bar.get_height() + 0.02,

f'{acc:.1%}', ha='center', fontsize=12)

plt.grid(True, alpha=0.3, axis='y')

plt.suptitle('ReLU Activation Enables Non-linear Classification', fontsize=14, fontweight='bold')

plt.tight_layout()

plt.show()

print(f"Linear model: {linear_acc:.1%} accuracy")

print(f"2-neuron model: {twoneuron_acc:.1%} accuracy")

print(f"4-neuron model: {optimized_acc:.1%} accuracy")

print(f"\nFinal losses - Linear: {losses_linear[-1]:.4f}, 2-neuron: {losses_twoneuron[-1]:.4f}, 4-neuron: {losses_optimized[-1]:.4f}")

Linear model: 87.1% accuracy

2-neuron model: 100.0% accuracy

4-neuron model: 100.0% accuracy

Final losses - Linear: 0.0520, 2-neuron: 0.0264, 4-neuron: 0.0065

Backpropagation with Activation Functions¶

To handle non-linear patterns, we add an activation function $g$: $$\hat{y} = g(\mathbf{w}^T\mathbf{x} + b)$$

Common choices:

- Sigmoid: $\sigma(z) = \frac{1}{1 + e^{-z}}$ — Outputs in (0,1)

- ReLU: $\text{ReLU}(z) = \max(0, z)$ — Simple and efficient

- Tanh: $\tanh(z) = \frac{e^z - e^{-z}}{e^z + e^{-z}}$ — Outputs in (-1,1)

With activation function $g$, the forward pass becomes:

- Linear: $z = \mathbf{w}^T\mathbf{x} + b$

- Activation: $\hat{y} = g(z)$

For backpropagation, we use the chain rule. Given loss $L = \frac{1}{2}(y - \hat{y})^2$:

Step 1: Error at output $$\delta = \frac{\partial L}{\partial \hat{y}} = -(y - \hat{y})$$

Step 2: Error before activation $$\frac{\partial L}{\partial z} = \frac{\partial L}{\partial \hat{y}} \cdot \frac{\partial \hat{y}}{\partial z} = \delta \cdot g'(z)$$

Step 3: Gradients $$\frac{\partial L}{\partial \mathbf{w}} = \frac{\partial L}{\partial z} \cdot \frac{\partial z}{\partial \mathbf{w}} = \delta \cdot g'(z) \cdot \mathbf{x}$$ $$\frac{\partial L}{\partial b} = \delta \cdot g'(z)$$

This is what the backward method implements:

    def backward(self, y_true):
        error = self.a - y_true              # δ = ŷ - y
        dz = error * self.g_prime(self.z)    # δ * g'(z)
        self.w -= self.lr * dz * self.x      # w -= η * ∂L/∂w
        self.b -= self.lr * dz               # b -= η * ∂L/∂b

def sigmoid(z):

return 1 / (1 + np.exp(-np.clip(z, -500, 500)))

def sigmoid_prime(z):

s = sigmoid(z)

return s * (1 - s)

# Generate data

z = np.linspace(-10, 10, 1000)

sigmoid_vals = sigmoid(z)

gradient_vals = sigmoid_prime(z)

# Create plot

plt.figure(figsize=(10, 6))

plt.plot(z, sigmoid_vals, 'b-', linewidth=2, label='Sigmoid σ(z)')

plt.plot(z, gradient_vals, 'r-', linewidth=2, label="Sigmoid Gradient σ'(z)")

plt.xlabel('Input (z)')

plt.ylabel('Output')

plt.title('Sigmoid Function and Its Gradient')

plt.grid(True, alpha=0.3)

plt.legend()

plt.xlim(-10, 10)

plt.ylim(0, 1)

plt.show()

class Perceptron:

def __init__(self, dim, lr=0.01):

self.w = np.random.randn(dim) * 0.01

self.b = 0.0

self.lr = lr

def forward(self, x):

self.x = x

self.z = np.dot(x, self.w) + self.b

self.a = sigmoid(self.z)

return self.a

def backward(self, y_true):

# Compute gradients using chain rule

error = self.a - y_true

dz = error * sigmoid_prime(self.z)

# Update parameters

self.w -= self.lr * dz * self.x

self.b -= self.lr * dz

return 0.5 * error**2
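A quick way to gain confidence in a hand-derived gradient is to compare it with a finite-difference estimate. The sketch below is a standalone check, not part of the original notebook: the helper names `loss`, `analytic_grad`, and `numeric_grad` are hypothetical, and it assumes the sigmoid activation and the loss $L = \frac{1}{2}(y - \hat{y})^2$ used above.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(w, b, x, y):
    # L = 0.5 * (y - y_hat)^2 with y_hat = sigmoid(w.x + b)
    return 0.5 * (y - sigmoid(np.dot(w, x) + b)) ** 2

def analytic_grad(w, b, x, y):
    # Chain rule: dL/dz = (y_hat - y) * g'(z), with g'(z) = y_hat * (1 - y_hat)
    a = sigmoid(np.dot(w, x) + b)
    dz = (a - y) * a * (1.0 - a)
    return dz * x, dz              # dL/dw, dL/db

def numeric_grad(w, b, x, y, h=1e-6):
    # Central differences, one parameter at a time
    gw = np.zeros_like(w)
    for i in range(len(w)):
        e = np.zeros_like(w)
        e[i] = h
        gw[i] = (loss(w + e, b, x, y) - loss(w - e, b, x, y)) / (2 * h)
    gb = (loss(w, b + h, x, y) - loss(w, b - h, x, y)) / (2 * h)
    return gw, gb

# Arbitrary illustrative values
w = np.array([0.3, -0.2])
b = 0.1
x = np.array([1.5, -0.7])
y = 1.0

gw_a, gb_a = analytic_grad(w, b, x, y)
gw_n, gb_n = numeric_grad(w, b, x, y)
print("analytic:", gw_a, gb_a)
print("numeric: ", gw_n, gb_n)
```

If the two results disagree beyond small numerical error, the derivation or its implementation likely contains a sign or chain-rule mistake.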

The Critical Role of Nonlinearity¶

Why do we need the activation function $g$? What happens if we just use a linear function, like $g(z) = z$?

Consider a network with multiple layers, but no non-linear activation functions between them. The output of one layer is just a linear transformation of its input. If we stack these linear layers:

Let the first layer be $h_1 = W_1 x + b_1$. Let the second layer be $h_2 = W_2 h_1 + b_2$.

Substituting the first into the second: $$h_2 = W_2 (W_1 x + b_1) + b_2$$ $$h_2 = W_2 W_1 x + W_2 b_1 + b_2$$

This can be rewritten as: $$h_2 = (W_2 W_1) x + (W_2 b_1 + b_2)$$

Let $W_{eq} = W_2 W_1$ and $b_{eq} = W_2 b_1 + b_2$. Then: $$h_2 = W_{eq} x + b_{eq}$$

This is just another linear transformation! No matter how many linear layers we stack, the entire network can only compute a single linear (affine) function of the input. In 1D, that means a linear network can only learn $y = mx + b$, a straight line. But $\sin(\pi x)$ is curved, so it cannot be represented with linear transformations alone.

To approximate complex, non-linear functions like $\sin(\pi x)$, we must introduce non-linearity using activation functions between the layers.
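The collapse derived above can be checked numerically. This is a small sketch with randomly drawn weights (the layer width of 5 and the seed are arbitrary assumptions): two stacked linear layers give exactly the same outputs as the single layer built from $W_{eq} = W_2 W_1$ and $b_{eq} = W_2 b_1 + b_2$.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two linear layers: 1 -> 5 -> 1, with no activation in between
W1, b1 = rng.normal(size=(5, 1)), rng.normal(size=5)
W2, b2 = rng.normal(size=(1, 5)), rng.normal(size=1)

# Equivalent single layer
W_eq = W2 @ W1          # shape (1, 1)
b_eq = W2 @ b1 + b2     # shape (1,)

# Evaluate both on a batch of inputs (rows are samples)
x = np.linspace(0.0, 1.0, 7).reshape(-1, 1)
two_layer = (x @ W1.T + b1) @ W2.T + b2
one_layer = x @ W_eq.T + b_eq

print("max difference:", np.max(np.abs(two_layer - one_layer)))
```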

# Demonstrate Linear Network Failure

class LinearNetwork(nn.Module):

"""A simple network with only linear layers (no activation)"""

def __init__(self, width):

super().__init__()

self.layers = nn.Sequential(

nn.Linear(1, width),

nn.Linear(width, 1) # Final linear layer

)

def forward(self, x):

return self.layers(x)

# Prepare training data as tensors (using both naming conventions for compatibility)

x_train_tensor = torch.FloatTensor(x_train.reshape(-1, 1))

y_train_tensor = torch.FloatTensor(y_train.reshape(-1, 1))

u_train_tensor = y_train_tensor # Alias for compatibility with later cells

# Also create test tensors for evaluation

x_test_tensor = torch.FloatTensor(x_test.reshape(-1, 1))

y_test_tensor = torch.FloatTensor(y_exact.reshape(-1, 1))

u_test_tensor = y_test_tensor # Alias for compatibility

# Create a simple linear model (no hidden layer, just for comparison)

linear_model = nn.Linear(1, 1)

criterion = nn.MSELoss()

optimizer = optim.Adam(linear_model.parameters(), lr=0.01)

epochs = 3000

for epoch in range(epochs):

predictions = linear_model(x_train_tensor)

loss = criterion(predictions, y_train_tensor)

optimizer.zero_grad()

loss.backward()

optimizer.step()

if epoch % 500 == 0:

print(f"Epoch {epoch}: Loss = {loss.item():.6f}")

# Test linear model

linear_pred = linear_model(x_test_tensor).detach().numpy().flatten()

# Compare linear vs nonlinear data

plt.figure(figsize=(10, 5))

plt.plot(x_test, y_exact, 'b-', linewidth=2, label='True (Nonlinear)')

plt.plot(x_test, linear_pred, 'r--', linewidth=2, label='Linear Model')

plt.scatter(x_train, y_train, s=50, c='black', zorder=5, alpha=0.5, label='Training Data')

plt.xlabel('x')

plt.ylabel('u(x)')

plt.title('Linear Model Fails on Nonlinear Data')

plt.legend()

plt.grid(True, alpha=0.3)

plt.show()

print(f"Linear model can only fit a straight line to nonlinear data!")

print(f"Final MSE: {np.mean((linear_pred - y_exact)**2):.4f}")

Epoch 0: Loss = 3.436656

Epoch 500: Loss = 0.124569

Epoch 1000: Loss = 0.109571

Epoch 1500: Loss = 0.108992

Epoch 2000: Loss = 0.108988

Epoch 2500: Loss = 0.108988

Linear model can only fit a straight line to nonlinear data!

Final MSE: 0.0998

Nonlinear Activation Functions¶

Activation functions are applied element-wise to the output of a linear transformation within a neuron or layer. They introduce the non-linearity required for neural networks to learn complex mappings.

Some common activation functions include:

- Sigmoid: Squashes input to (0, 1). Useful for binary classification output. Can suffer from vanishing gradients. $$ \sigma(x) = \frac{1}{1 + e^{-x}} $$

- Tanh: Squashes input to (-1, 1). Zero-centered, often preferred over Sigmoid for hidden layers. Can also suffer from vanishing gradients. $$\tanh(x) = \frac{e^x - e^{-x}}{e^x + e^{-x}} $$

- ReLU (Rectified Linear Unit): Outputs input directly if positive, zero otherwise. Computationally efficient, helps mitigate vanishing gradients for positive inputs. Can suffer from "dead neurons" if inputs are always negative. $$ f(x) = \max(0, x)$$

- LeakyReLU: Similar to ReLU but allows a small gradient for negative inputs, which helps prevent dead neurons. $$f(x) = \max(\alpha x, x) \quad (\alpha \text{ is a small positive constant, e.g., 0.01})$$
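The remarks about vanishing gradients and dead neurons are statements about the derivatives of these functions. The following sketch evaluates each derivative at a few sample inputs. The function names are hypothetical helpers for this example, and the ReLU derivative at exactly zero is set to 0 by convention.

```python
import numpy as np

def sigmoid_grad(z):
    s = 1.0 / (1.0 + np.exp(-z))
    return s * (1.0 - s)            # peaks at 0.25 when z = 0

def tanh_grad(z):
    return 1.0 - np.tanh(z) ** 2    # peaks at 1.0 when z = 0

def relu_grad(z):
    return (z > 0).astype(float)    # exactly 0 for z <= 0 ("dead" region)

def leaky_relu_grad(z, alpha=0.01):
    return np.where(z > 0, 1.0, alpha)  # small but nonzero for z <= 0

z = np.array([-5.0, 0.0, 5.0])
for name, fn in [("sigmoid", sigmoid_grad), ("tanh", tanh_grad),
                 ("relu", relu_grad), ("leaky_relu", leaky_relu_grad)]:
    print(f"{name:>10}: {fn(z)}")
```

Sigmoid and tanh gradients shrink toward zero for large $|z|$, while ReLU keeps a gradient of 1 for positive inputs and LeakyReLU keeps a gradient of $\alpha$ for negative ones.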

import numpy as np

import matplotlib.pyplot as plt

# --- 1. Parameterized Activation Functions ---

def sigmoid(x, a=1.0):

"""

Parameterized Sigmoid activation function.

'a' controls the steepness.

"""

return 1 / (1 + np.exp(-a * x))

def tanh(x, a=1.0):

"""

Parameterized Hyperbolic Tangent activation function.

'a' controls the steepness.

"""

return np.tanh(a * x)

def relu(x):

"""

Rectified Linear Unit (ReLU) activation function.

It has no parameters.

"""

return np.maximum(0, x)

def leaky_relu(x, alpha=0.1):

"""

Parameterized Leaky ReLU activation function.

'alpha' is the slope for negative inputs.

"""

return np.maximum(alpha * x, x)

# --- 2. Setup for Plotting ---

# Input data range

x = np.linspace(-5, 5, 200)

# Create a 2x2 subplot grid

fig, axs = plt.subplots(2, 2, figsize=(12, 10))

fig.suptitle('Common Activation Functions (Parameterized)', fontsize=16, fontweight='bold')

# --- 3. Plotting each function on its subplot ---

# Sigmoid Plot (Top-Left)

axs[0, 0].plot(x, sigmoid(x, a=1), label='a=1 (Standard)')

axs[0, 0].plot(x, sigmoid(x, a=2), label='a=2 (Steeper)', linestyle='--')

axs[0, 0].plot(x, sigmoid(x, a=0.5), label='a=0.5 (Less Steep)', linestyle=':')

axs[0, 0].set_title('Sigmoid Function')

axs[0, 0].legend()

# Tanh Plot (Top-Right)

axs[0, 1].plot(x, tanh(x, a=1), label='a=1 (Standard)')

axs[0, 1].plot(x, tanh(x, a=2), label='a=2 (Steeper)', linestyle='--')

axs[0, 1].plot(x, tanh(x, a=0.5), label='a=0.5 (Less Steep)', linestyle=':')

axs[0, 1].set_title('Tanh Function')

axs[0, 1].legend()

# ReLU Plot (Bottom-Left)

axs[1, 0].plot(x, relu(x), label='ReLU')

axs[1, 0].set_title('ReLU Function')

axs[1, 0].legend()

# Leaky ReLU Plot (Bottom-Right)

axs[1, 1].plot(x, leaky_relu(x, alpha=0.1), label='α=0.1 (Standard)')

axs[1, 1].plot(x, leaky_relu(x, alpha=0.3), label='α=0.3', linestyle='--')

axs[1, 1].plot(x, leaky_relu(x, alpha=0.01), label='α=0.01', linestyle=':')

axs[1, 1].set_title('Leaky ReLU Function')

axs[1, 1].legend()

# --- 4. Final Touches for all subplots ---

# Apply common labels, grids, and axis lines to all subplots

for ax in axs.flat:

ax.set_xlabel('Input z')

ax.set_ylabel('Output g(z)')

ax.grid(True, which='both', linestyle='--', linewidth=0.5)

ax.axhline(y=0, color='k', linewidth=0.8)

ax.axvline(x=0, color='k', linewidth=0.8)

# Adjust layout to prevent titles and labels from overlapping

plt.tight_layout(rect=[0, 0.03, 1, 0.95])

# Display the plot

plt.show()

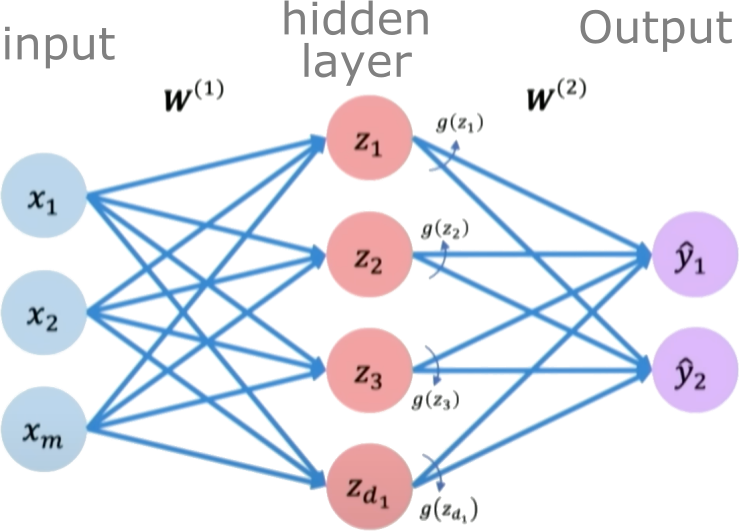

Building Capacity: The Single Hidden Layer Neural Network¶

A single perceptron is limited in the complexity of functions it can represent. To increase capacity, we combine multiple perceptrons into a layer. A single-layer feedforward neural network (also known as a shallow Multi-Layer Perceptron or MLP) consists of an input layer, one hidden layer of neurons, and an output layer.

For our 1D input $x$, a single-layer network with $N_h$ hidden neurons works as follows:

- Input Layer: Receives the input $x$.

- Hidden Layer: Each of the $N_h$ neurons in this layer performs a linear transformation on the input $x$ and applies a non-linear activation function $g$. The output of this layer is a vector $\boldsymbol{h}$ of size $N_h$.

- Pre-activation vector $\boldsymbol{z}^{(1)}$ (size $N_h$): $\boldsymbol{z}^{(1)} = W^{(1)}\boldsymbol{x} + \boldsymbol{b}^{(1)}$ (Here, $W^{(1)}$ is a $N_h \times 1$ weight matrix, $\boldsymbol{x}$ is treated as a $1 \times 1$ vector, and $\boldsymbol{b}^{(1)}$ is a $N_h \times 1$ bias vector).

- Activation vector $\boldsymbol{h}$ (size $N_h$): $\boldsymbol{h} = g(\boldsymbol{z}^{(1)})$ (where $g$ is applied element-wise).

- Output Layer: This layer takes the vector $\boldsymbol{h}$ from the hidden layer and performs another linear transformation to produce the final scalar output $\hat{y}$. For regression, the output layer typically has a linear activation (or no activation function explicitly applied after the linear transformation).

- Pre-activation scalar $z^{(2)}$: $z^{(2)} = W^{(2)}\boldsymbol{h} + b^{(2)}$ (Here, $W^{(2)}$ is a $1 \times N_h$ weight matrix, and $b^{(2)}$ is a scalar bias).

- Final output $\hat{y}$: $\hat{y} = z^{(2)}$

Credits: Alexander Amini, MIT
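Before turning to PyTorch, the forward pass described above can be written out directly with the stated shapes. This is a minimal NumPy sketch under a few assumptions: $N_h = 4$, $g = \tanh$, and random weights. The function name `forward` is illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)
N_h = 4                               # number of hidden neurons (arbitrary)

W1 = rng.normal(size=(N_h, 1))        # W^(1): N_h x 1
b1 = rng.normal(size=(N_h, 1))        # b^(1): N_h x 1
W2 = rng.normal(size=(1, N_h))        # W^(2): 1 x N_h
b2 = 0.0                              # b^(2): scalar

def forward(x):
    """Map a scalar input x to a scalar prediction y_hat."""
    x_vec = np.array([[x]])           # treat x as a 1 x 1 vector
    z1 = W1 @ x_vec + b1              # pre-activation, N_h x 1
    h = np.tanh(z1)                   # element-wise activation, N_h x 1
    z2 = W2 @ h + b2                  # output pre-activation, 1 x 1
    return z2.item()                  # linear output: y_hat = z2

y_hat = forward(0.5)
print("prediction at x = 0.5:", y_hat)
```

PyTorch's `nn.Linear` stores the same weight shapes, `(out_features, in_features)`, but processes a whole batch of inputs as rows.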

# Implement Single-Layer NN in PyTorch

class SingleLayerNN(nn.Module):

"""Single hidden layer neural network for 1D input/output"""

def __init__(self, hidden_size=10):

super(SingleLayerNN, self).__init__()

# Input layer (1D) to Hidden layer (hidden_size)

self.hidden = nn.Linear(1, hidden_size)

# Hidden layer (hidden_size) to Output layer (1D)

self.output = nn.Linear(hidden_size, 1)

# Choose activation function for the hidden layer

self.activation = nn.Tanh()

def forward(self, x):

# Pass through hidden layer and apply activation

x = self.hidden(x)

x = self.activation(x)

# Pass through output layer (linear output)

x = self.output(x)

return x

Let's plot the network's prediction before any training:

# Define variables for compatibility with legacy code

x_plot = x_test # Use the test grid we already defined

u_analytical_plot = y_exact # The true function values

x_train_np = x_train # Training x values

u_train_noisy_np = y_train # Training y values with noise

# Redefine SingleLayerNN so this cell runs on its own (note: this version uses a sigmoid hidden activation instead of Tanh)

class SingleLayerNN(nn.Module):

"""Single hidden layer neural network for 1D input/output"""

def __init__(self, hidden_size=10):

super(SingleLayerNN, self).__init__()

self.hidden = nn.Linear(1, hidden_size)

self.output = nn.Linear(hidden_size, 1)

def forward(self, x):

x = torch.sigmoid(self.hidden(x))

return self.output(x)

model = SingleLayerNN(10)

# Visualize the untrained network's prediction

x_test_tensor_plot = torch.tensor(x_plot.reshape(-1, 1), dtype=torch.float32)

with torch.no_grad():

u_pred_linear = model(x_test_tensor_plot).numpy().flatten()

fig, ax = plt.subplots(1, 1, figsize=(10, 6))

ax.plot(x_plot, u_analytical_plot, 'b-', linewidth=3, label='True Function: $\\sin(\\pi x)$', alpha=0.7)

ax.plot(x_plot, u_pred_linear, 'r--', linewidth=2, label='Untrained NN (Random Weights)')

ax.scatter(x_train_np, u_train_noisy_np, color='k', s=40, alpha=0.7, label='Training Data', zorder=5)

ax.set_xlabel('x', fontsize=12)

ax.set_ylabel('u(x)', fontsize=12)

ax.set_title('Untrained Neural Network with Random Initialization', fontsize=14, fontweight='bold')

ax.legend(fontsize=11, loc='best')

ax.grid(True, alpha=0.3)

plt.tight_layout()

plt.show()

print("Note: The untrained network produces random output due to random weight initialization.")

Note: The untrained network produces random output due to random weight initialization.

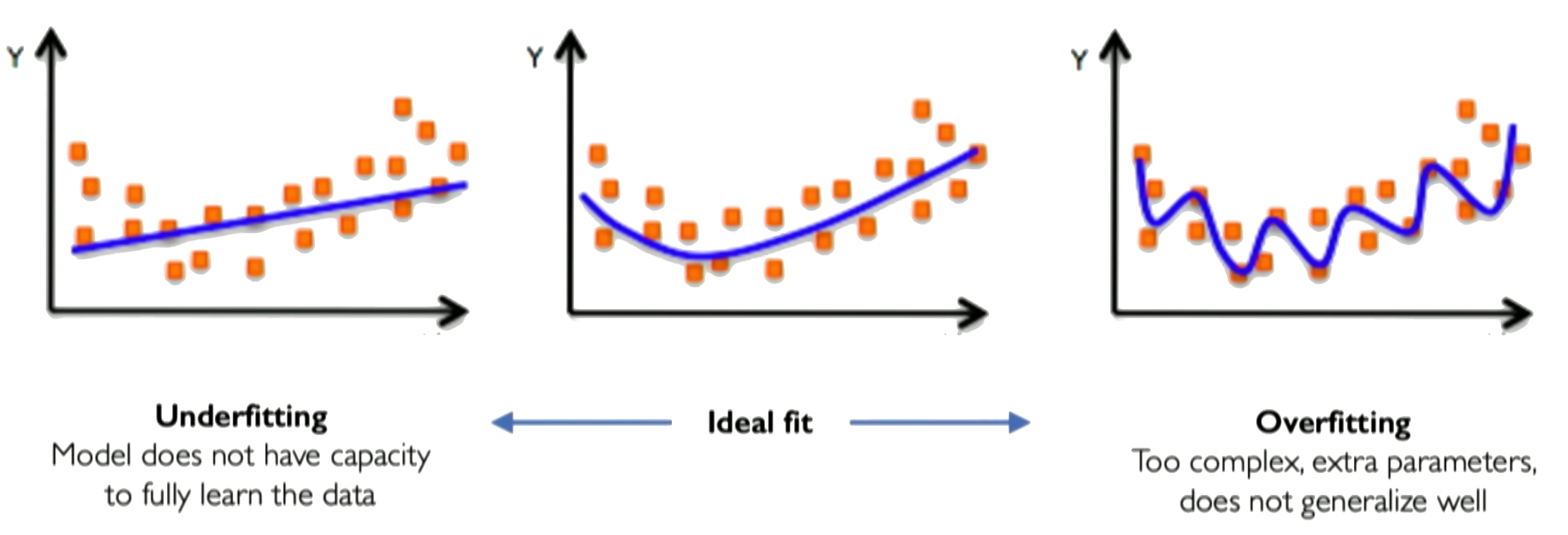

Training a neural network¶

Neural networks are trained using an optimization algorithm that iteratively updates the network's weights and biases to minimize a loss function. The loss function measures how far the network's predictions are from the true target outputs in the training data. It is a measure of the model's error.

We quantify this difference using a loss function. Some common loss functions include:

- Mean squared error (MSE): The average of the squared differences between the predicted and actual values. Used for regression problems.

- Cross-entropy loss: Measures the divergence between the predicted class probabilities and the true distribution. Used for classification problems. Penalizes confident incorrect predictions.

- Hinge loss: Used for Support Vector Machine classifiers. Penalizes predictions that are on the wrong side of the decision boundary or inside the margin.

For our function approximation (regression) task, the Mean Squared Error (MSE) is a common choice:

$$\mathcal{L}(\theta) = \frac{1}{N} \sum_{i=1}^N \left(u_{NN}(x_i; \theta) - u_i\right)^2$$

Minimizing this loss function with respect to the parameters $\theta$ is an optimization problem.
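As a small worked instance of the MSE formula, the sketch below evaluates the loss for three made-up predictions. The helper name `mse_loss` and the numbers are assumptions for illustration, not results from this notebook.

```python
import numpy as np

def mse_loss(u_pred, u_true):
    """Mean of squared differences between predictions and targets."""
    u_pred = np.asarray(u_pred, dtype=float)
    u_true = np.asarray(u_true, dtype=float)
    return np.mean((u_pred - u_true) ** 2)

# Hypothetical targets and predictions at three points
u_true = np.array([0.0, 1.0, 0.0])
u_pred = np.array([0.1, 0.8, -0.1])

# Squared errors are 0.01, 0.04, 0.01, so the mean is 0.06 / 3 = 0.02
loss = mse_loss(u_pred, u_true)
print(f"MSE = {loss:.4f}")
```

Because the errors are squared, the single larger miss (0.2) contributes four times as much as each smaller one (0.1).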

Loss optimization is the process of finding the network weights that achieve the lowest loss.

$$ \begin{aligned} \boldsymbol{w^*} &= \operatorname*{arg\,min}_{\boldsymbol{w}}\frac{1}{n}\sum_{i=1}^n \mathcal{L}(f(x^{(i)};\boldsymbol{w}),y^{(i)})\\ \boldsymbol{w^*} &= \operatorname*{arg\,min}_{\boldsymbol{w}} J(\boldsymbol{w}) \end{aligned} $$

The training process works like this:

1. Initialization: The weights and biases of the network are initialized, often with small random numbers.

2. Forward Pass: The input is passed through the network, layer by layer, applying the necessary transformations (e.g., linear combinations of weights and inputs followed by activation functions) until an output is obtained.

3. Calculate Loss: A loss function is used to quantify the difference between the predicted output and the actual target values.

4. Backward Pass (Backpropagation): The gradients of the loss with respect to the parameters (weights and biases) are computed using the chain rule for derivatives. This process is known as backpropagation.

5. Update Parameters: The gradients computed in the backward pass are used to update the parameters of the network, typically using optimization algorithms like stochastic gradient descent (SGD) or more sophisticated ones like Adam. Each update moves the parameters in the direction that decreases the loss.

6. Repeat: Steps 2-5 are repeated using the next batch of data until a stopping criterion is met, such as a set number of epochs (full passes through the training dataset) or convergence to a minimum loss value.

7. Validation: The model is evaluated on a separate validation set to assess its generalization to unseen data.

The goal of training is to find the optimal set of weights and biases $\theta^*$ for the network that minimize the difference between the network's output $u_{NN}(x; \theta)$ and the true training data $u_{train}$.
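The steps above can be sketched end to end without any framework. The example below is a minimal NumPy version with hand-written backpropagation, under several assumptions that are not taken from this notebook: noise-free samples of $\sin(\pi x)$, 10 tanh hidden units, full-batch gradient descent, and a learning rate of 0.05. The validation step is omitted for brevity.

```python
import numpy as np

rng = np.random.default_rng(0)

# Training data: noise-free samples of sin(pi x) (an assumption of this sketch)
x = np.linspace(0.0, 1.0, 20).reshape(-1, 1)    # shape (N, 1)
u = np.sin(np.pi * x)                           # shape (N, 1)
N = len(x)

# Initialization
n_hidden = 10
W1 = rng.normal(size=(1, n_hidden))
b1 = np.zeros(n_hidden)
W2 = rng.normal(scale=0.5, size=(n_hidden, 1))
b2 = np.zeros(1)

lr = 0.05
losses = []
for epoch in range(2000):
    # Forward pass
    h = np.tanh(x @ W1 + b1)          # hidden activations, (N, n_hidden)
    u_pred = h @ W2 + b2              # linear output, (N, 1)

    # Calculate loss (MSE)
    err = u_pred - u
    losses.append(float(np.mean(err ** 2)))

    # Backward pass: chain rule, layer by layer
    d_pred = 2.0 * err / N            # dL/du_pred
    dW2 = h.T @ d_pred
    db2 = d_pred.sum(axis=0)
    dh = d_pred @ W2.T
    dz1 = dh * (1.0 - h ** 2)         # tanh'(z) = 1 - tanh(z)^2
    dW1 = x.T @ dz1
    db1 = dz1.sum(axis=0)

    # Update parameters (plain gradient descent)
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

print(f"initial loss: {losses[0]:.4f}, final loss: {losses[-1]:.4f}")
```

In PyTorch the backward-pass block is replaced by a single `loss.backward()` call, and the update block by `optimizer.step()`.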

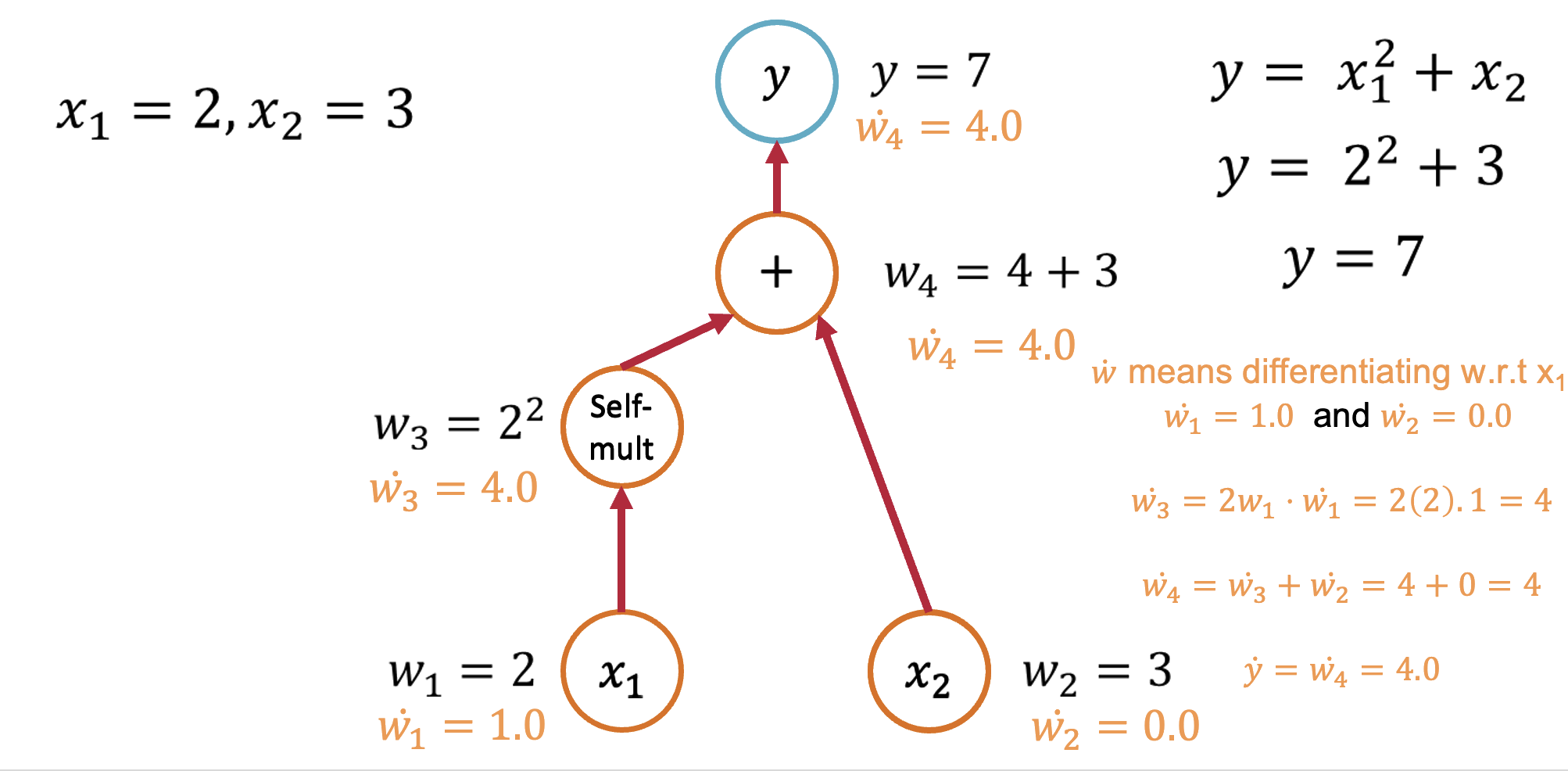

Computing gradients with Automatic Differentiation¶

The Core Insight: Functions Are Computational Graphs

Every computer program that evaluates a mathematical function can be viewed as a computational graph. Consider this simple function:

    def f(x1, x2):
        y = x1**2 + x2
        return y

This creates a computational graph where each operation is a node. This decomposition is the key insight that makes automatic differentiation possible.

Forward Mode Automatic Differentiation¶

Forward mode AD computes derivatives by propagating derivative information forward through the computational graph, following the same path as the function evaluation.

Forward Mode: Computing $\frac{\partial y}{\partial x_1}$¶

Starting with our function $y = x_1^2 + x_2$, let's trace through the computation:

Seed the input: Set $\dot{x}_1 = 1$ and $\dot{x}_2 = 0$ (we're differentiating w.r.t. $x_1$)

Forward propagation:

- $v_1 = x_1^2$, so $\dot{v}_1 = 2x_1 \cdot \dot{x}_1 = 2x_1 \cdot 1 = 2x_1$

- $y = v_1 + x_2$, so $\dot{y} = \dot{v}_1 + \dot{x}_2 = 2x_1 + 0 = 2x_1$

Result: $\frac{\partial y}{\partial x_1} = 2x_1$

Forward Mode: Computing $\frac{\partial y}{\partial x_2}$¶

To get the derivative w.r.t. $x_2$, we seed differently:

Seed the input: Set $\dot{x}_1 = 0$ and $\dot{x}_2 = 1$

Forward propagation:

- $v_1 = x_1^2$, so $\dot{v}_1 = 2x_1 \cdot \dot{x}_1 = 2x_1 \cdot 0 = 0$

- $y = v_1 + x_2$, so $\dot{y} = \dot{v}_1 + \dot{x}_2 = 0 + 1 = 1$

Result: $\frac{\partial y}{\partial x_2} = 1$

Key insight: Forward mode requires one pass per input variable to compute all partial derivatives.
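Forward mode can be implemented with dual numbers: each value carries its derivative along with it. The sketch below is a toy illustration, not how PyTorch is implemented. The class name `Dual` is hypothetical and it supports only addition and multiplication, which is enough for $y = x_1^2 + x_2$.

```python
class Dual:
    """A value paired with its derivative (the 'dot' quantity)."""

    def __init__(self, val, dot=0.0):
        self.val = val
        self.dot = dot

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Sum rule: (u + v)' = u' + v'
        return Dual(self.val + other.val, self.dot + other.dot)

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule: (u * v)' = u' * v + u * v'
        return Dual(self.val * other.val,
                    self.dot * other.val + self.val * other.dot)

    __rmul__ = __mul__


def f(x1, x2):
    return x1 * x1 + x2


# One forward pass per input: seed that input's derivative with 1
dy_dx1 = f(Dual(2.0, 1.0), Dual(3.0, 0.0)).dot   # 2 * x1 = 4.0
dy_dx2 = f(Dual(2.0, 0.0), Dual(3.0, 1.0)).dot   # 1.0

print("dy/dx1 =", dy_dx1)
print("dy/dx2 =", dy_dx2)
```

Two inputs required two calls to `f`, which matches the one-pass-per-input cost noted above.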

Reverse Mode Automatic Differentiation¶

Reverse mode AD (the technique underlying backpropagation) computes derivatives by propagating derivative information backward through the computational graph.

The Backward Pass Algorithm¶

- Forward pass: Compute function values and store intermediate results

- Seed the output: Set $\bar{y} = 1$ (derivative of output w.r.t. itself)

- Backward pass: Use the chain rule to propagate derivatives backward

Computing All Partial Derivatives in One Pass¶

The beauty of reverse mode is that it computes all partial derivatives in a single backward pass:

Forward pass: $y = x_1^2 + x_2$ (store intermediate values)

Backward pass with $\bar{y} = 1$:

- $\frac{\partial y}{\partial x_1} = \frac{\partial y}{\partial v_1} \cdot \frac{\partial v_1}{\partial x_1} = 1 \cdot 2x_1 = 2x_1$

- $\frac{\partial y}{\partial x_2} = \bar{y} \cdot \frac{\partial (v_1 + x_2)}{\partial x_2} = 1 \cdot 1 = 1$

Key insight: Reverse mode computes gradients w.r.t. all inputs in a single backward pass!
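The backward pass can be sketched with a tiny tape-style implementation. This is a toy illustration under stated assumptions, not PyTorch's actual machinery: the class `Var` and function `backward` are hypothetical, and only addition and multiplication are supported. Each node records its parents together with the local derivative, and a single reverse sweep accumulates all gradients.

```python
class Var:
    """A graph node storing its value, its parents, and an accumulated gradient."""

    def __init__(self, val, parents=()):
        self.val = val
        self.parents = parents      # tuples of (parent node, local derivative)
        self.grad = 0.0

    def __add__(self, other):
        return Var(self.val + other.val, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.val * other.val,
                   ((self, other.val), (other, self.val)))


def backward(output):
    # Order the nodes so every node appears after its parents
    order, seen = [], set()

    def visit(node):
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)

    # Seed the output, then sweep from the output back to the inputs
    output.grad = 1.0
    for node in reversed(order):
        for parent, local in node.parents:
            parent.grad += node.grad * local   # chain rule


# Forward pass builds the graph and stores intermediate values
x1, x2 = Var(2.0), Var(3.0)
y = x1 * x1 + x2

# A single backward pass fills in both gradients
backward(y)
print("dy/dx1 =", x1.grad)
print("dy/dx2 =", x2.grad)
```

Note that `x1` is used twice in `x1 * x1`, so its gradient is accumulated from both uses, which is why `+=` is needed in the sweep.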

AD: The Mathematical Foundation¶

Automatic differentiation works because of a fundamental theorem:

Chain Rule: For composite functions $f(g(x))$: $$\frac{d}{dx}f(g(x)) = f'(g(x)) \cdot g'(x)$$

By systematically applying the chain rule to each operation in a computational graph, AD can compute exact derivatives for arbitrarily complex functions.

Automatic Differentiation in Practice: PyTorch¶

Let's see how automatic differentiation works in PyTorch:

import torch

# Define variables that require gradients

x1 = torch.tensor(2.0, requires_grad=True)

x2 = torch.tensor(3.0, requires_grad=True)

# Define the function

y = x1**2 + x2

# Compute gradients using reverse mode AD

y.backward()

# Access the computed gradients

print(f"dy/dx1: {x1.grad.item()}") # Should be 2*x1 = 4.0

print(f"dy/dx2: {x2.grad.item()}") # Should be 1.0

dy/dx1: 4.0

dy/dx2: 1.0

A More Complex Example: Neural Network¶

import torch

import torch.nn as nn

# Implement Single-Layer NN in PyTorch

class SingleLayerNN(nn.Module):

"""Single hidden layer neural network for 1D input/output"""

def __init__(self, hidden_size=10):

super(SingleLayerNN, self).__init__()

self.hidden = nn.Linear(1, hidden_size)

self.output = nn.Linear(hidden_size, 1)

self.activation = nn.Tanh()

def forward(self, x):

# Forward pass

x = self.hidden(x)

x = self.activation(x)

x = self.output(x)

return x

# Create network and data

model = SingleLayerNN(hidden_size=10)

# Define MSE loss

criterion = nn.MSELoss()

# Forward pass: compute predictions

predictions = model(x_train_tensor)

# Calculate loss (((output - target)**2).mean())

loss = criterion(predictions, u_train_tensor)

# Backward pass: compute gradients

loss.backward() # Compute gradients of the loss w.r.t. parameters

# Access gradients

for name, param in model.named_parameters():

    print(f"{name}: gradient shape {param.grad.shape}")

hidden.weight: gradient shape torch.Size([10, 1])

hidden.bias: gradient shape torch.Size([10])

output.weight: gradient shape torch.Size([1, 10])

output.bias: gradient shape torch.Size([1])
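To see what a backward pass computes for a network of this shape, the following sketch writes out the chain rule by hand for a tiny one-hidden-layer tanh network with an MSE loss, in plain Python without PyTorch. Everything here is an assumption made for illustration: the hidden size `H = 3`, the random parameter values, the five sample points, and the helper name `loss_and_grads`. It is not how PyTorch implements autograd internally, only the same derivatives computed manually and checked against a finite difference.

```python
import math
import random

random.seed(0)
H = 3  # hidden units (kept small for readability)

# Parameters playing the roles of hidden.weight, hidden.bias, output.weight, output.bias
W1 = [random.gauss(0, 1) for _ in range(H)]
b1 = [random.gauss(0, 1) for _ in range(H)]
W2 = [random.gauss(0, 1) for _ in range(H)]
b2 = random.gauss(0, 1)

# A few samples of u(x) = sin(pi x)
xs = [0.0, 0.25, 0.5, 0.75, 1.0]
us = [math.sin(math.pi * x) for x in xs]

def loss_and_grads(W1, b1, W2, b2):
    n = len(xs)
    loss = 0.0
    gW1, gb1, gW2, gb2 = [0.0] * H, [0.0] * H, [0.0] * H, 0.0
    for x, u in zip(xs, us):
        # Forward pass (keep the hidden activations for the backward pass)
        a = [math.tanh(W1[j] * x + b1[j]) for j in range(H)]
        pred = sum(W2[j] * a[j] for j in range(H)) + b2
        loss += (pred - u) ** 2 / n
        # Backward pass: chain rule from the loss back to each parameter
        d_pred = 2.0 * (pred - u) / n
        gb2 += d_pred
        for j in range(H):
            gW2[j] += d_pred * a[j]
            d_z = d_pred * W2[j] * (1.0 - a[j] ** 2)  # tanh'(z) = 1 - tanh(z)^2
            gW1[j] += d_z * x
            gb1[j] += d_z
    return loss, gW1, gb1, gW2, gb2

loss, gW1, gb1, gW2, gb2 = loss_and_grads(W1, b1, W2, b2)

# Finite-difference check on a single parameter, W1[0]
eps = 1e-6
W1_plus = [W1[0] + eps] + W1[1:]
W1_minus = [W1[0] - eps] + W1[1:]
fd = (loss_and_grads(W1_plus, b1, W2, b2)[0]
      - loss_and_grads(W1_minus, b1, W2, b2)[0]) / (2 * eps)
print(f"backprop: {gW1[0]:.8f}, finite difference: {fd:.8f}")
```

Each gradient list has the same number of entries as the parameter it belongs to, which mirrors the gradient shapes printed above.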

When to Use Forward vs Reverse Mode¶

The choice depends on the structure of your problem:

- Forward Mode: Efficient when few inputs, many outputs (e.g., $f: \mathbb{R}^n \to \mathbb{R}^m$ with $n \ll m$)

- Reverse Mode: Efficient when many inputs, few outputs (e.g., $f: \mathbb{R}^n \to \mathbb{R}^m$ with $n \gg m$)

In machine learning, we typically have millions of parameters (inputs) and a single loss function (output), making reverse mode the natural choice.
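The cost of forward mode with many inputs can be seen in a minimal dual-number sketch. The `Dual` class and `forward_mode_gradient` helper below are hypothetical names written for this illustration and support only addition and multiplication.

```python
class Dual:
    """Minimal dual number (value, derivative) supporting + and *. Illustrative only."""

    def __init__(self, val, dot=0.0):
        self.val = val
        self.dot = dot

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.val + other.val, self.dot + other.dot)

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule carried along with the value
        return Dual(self.val * other.val,
                    self.dot * other.val + self.val * other.dot)

    __rmul__ = __mul__

def f(x1, x2):
    return x1 * x1 + x2

def forward_mode_gradient(func, point):
    grad = []
    for i in range(len(point)):
        # One forward pass per input: seed input i with derivative 1, the rest with 0
        args = [Dual(v, 1.0 if j == i else 0.0) for j, v in enumerate(point)]
        grad.append(func(*args).dot)
    return grad

print(forward_mode_gradient(f, [2.0, 3.0]))  # [4.0, 1.0]
```

The loop in `forward_mode_gradient` runs once per input, which is why this approach scales poorly to models with millions of parameters and a single scalar loss.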

Computational Considerations¶

Memory vs Computation Trade-offs¶

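Forward Mode:

- Memory: a small constant factor over evaluating the function itself; no intermediate values need to be kept for later

- Computation: one forward pass per input variable, so O(n) passes for the full gradient with respect to n inputs

Reverse Mode:

- Memory: O(computation graph size), since intermediate values from the forward pass must be stored for the backward pass

- Computation: one backward pass per output, so a single pass gives the gradient of a scalar output regardless of the number of inputs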

Modern Optimizations¶

- Checkpointing: Trade computation for memory by recomputing intermediate values

- JIT compilation: Compile computational graphs for faster execution

- Parallelization: Distribute gradient computation across multiple devices
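The checkpointing idea can be sketched on a toy chain of operations. The example below applies `sin` repeatedly, stores the input only every `every` steps, and recomputes each segment during the backward sweep. All function names and the choices `n_ops = 1000`, `every = 50` are assumptions for illustration; framework implementations are considerably more general.

```python
import math

def forward_with_checkpoints(x, n_ops, every):
    """Apply sin() n_ops times, storing the input only every `every` steps."""
    checkpoints = {}
    for k in range(n_ops):
        if k % every == 0:
            checkpoints[k] = x
        x = math.sin(x)
    return x, checkpoints

def backward_with_checkpoints(checkpoints, n_ops, every):
    """Reverse sweep that recomputes each segment from its checkpoint."""
    grad = 1.0
    for start in sorted(checkpoints, reverse=True):  # last segment first
        end = min(start + every, n_ops)
        # Recompute this segment's inputs: extra computation, less stored state
        xs = []
        x = checkpoints[start]
        for _ in range(start, end):
            xs.append(x)
            x = math.sin(x)
        for x_k in reversed(xs):
            grad *= math.cos(x_k)  # local derivative of sin at x_k
    return grad

def backward_full_tape(x, n_ops):
    """Reference version that stores every intermediate value."""
    tape = []
    for _ in range(n_ops):
        tape.append(x)
        x = math.sin(x)
    grad = 1.0
    for x_k in reversed(tape):
        grad *= math.cos(x_k)
    return grad, len(tape)

n_ops, every = 1000, 50
_, ckpts = forward_with_checkpoints(0.5, n_ops, every)
g_ckpt = backward_with_checkpoints(ckpts, n_ops, every)
g_full, n_stored = backward_full_tape(0.5, n_ops)
print(len(ckpts), n_stored)  # 20 1000
print(abs(g_ckpt - g_full))
```

The checkpointed version holds 20 checkpoints plus at most one 50-value segment at a time, at the price of roughly one extra forward evaluation.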

Gradient Descent¶

Gradient Descent is a first-order iterative optimization algorithm used to find the minimum of a differentiable function. In the context of training a neural network, we are trying to minimize the loss function.

- Initialize Parameters:

Choose an initial point (i.e., initial values for the weights and biases) in the parameter space, and set a learning rate that determines the step size in each iteration.

- Compute the Gradient:

Calculate the gradient of the loss function with respect to the parameters at the current point. The gradient is a vector that points in the direction of the steepest increase of the function. It is obtained by taking the partial derivatives of the loss function with respect to each parameter.

- Update Parameters:

Move in the opposite direction of the gradient by a distance proportional to the learning rate. This is done by subtracting the gradient times the learning rate from the current parameters:

$$\boldsymbol{w} = \boldsymbol{w} - \eta \nabla J(\boldsymbol{w})$$

Here, $\boldsymbol{w}$ represents the parameters, $\eta$ is the learning rate, and $\nabla J (\boldsymbol{w})$ is the gradient of the loss function $J$ with respect to $\boldsymbol{w}$.

- Repeat:

Repeat the gradient computation and the parameter update until the change in the loss function falls below a predefined threshold, or a maximum number of iterations is reached.
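The steps above can be sketched on a one-parameter toy problem. The objective $J(w) = (w - 3)^2$, the helper name `gradient_descent`, and the default values for `lr`, `tol`, and `max_iters` are all arbitrary choices for illustration.

```python
def gradient_descent(grad_fn, loss_fn, w0, lr=0.1, tol=1e-10, max_iters=1000):
    """Plain gradient descent on a scalar parameter (illustrative names)."""
    w = w0                                # initialize the parameter
    prev_loss = loss_fn(w)
    for it in range(1, max_iters + 1):
        w = w - lr * grad_fn(w)           # step against the gradient
        loss = loss_fn(w)
        if abs(prev_loss - loss) < tol:   # stop when the loss stops changing
            break
        prev_loss = loss
    return w, loss, it

# Toy objective J(w) = (w - 3)^2 with gradient 2 (w - 3); the minimum is at w = 3
J = lambda w: (w - 3.0) ** 2
dJ = lambda w: 2.0 * (w - 3.0)

w_star, final_loss, n_iters = gradient_descent(dJ, J, w0=0.0)
print(f"w = {w_star:.5f}, loss = {final_loss:.2e}, iterations = {n_iters}")
```

On this convex problem the loop stops on the loss-change threshold well before the iteration cap; on a neural network loss the same loop offers no such guarantee.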

Algorithm:¶

- Initialize weights randomly $\sim \mathcal{N}(0, \sigma^2)$

- Loop until convergence

- Compute gradient, $\frac{\partial J(\boldsymbol{w})}{\partial \boldsymbol{w}}$

- Update weights, $\boldsymbol{w} \leftarrow \boldsymbol{w} - \eta \frac{\partial J(\boldsymbol{w})}{\partial \boldsymbol{w}}$

- Return weights

Let's compute the gradient of the loss with respect to the weights, assuming the loss function is the mean squared error (MSE):

$$\text{loss} = \frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2$$

where $y_i$ are the true targets and $\hat{y}_i$ are the predicted values.

To minimize this loss, we need to compute the gradients with respect to the weights $\mathbf{w}$ and bias $b$:

Using the chain rule, the gradient of the loss with respect to the weights is: $$\frac{\partial \text{loss}}{\partial \mathbf{w}} = \frac{2}{n}\sum_{i=1}^{n}(\hat{y}_i - y_i) \frac{\partial \hat{y}_i}{\partial \mathbf{w}}$$

Note the order $(\hat{y}_i - y_i)$: only the prediction $\hat{y}_i$ depends on $\mathbf{w}$, and differentiating $(y_i - \hat{y}_i)^2$ with respect to $\hat{y}_i$ gives $-2(y_i - \hat{y}_i) = 2(\hat{y}_i - y_i)$.

The factor multiplying $\frac{\partial \hat{y}_i}{\partial \mathbf{w}}$ is the gradient of the loss with respect to the prediction $\hat{y}_i$, which we called $\text{grad\_output}$ (one entry per sample): $$\text{grad\_output}_i = \frac{2}{n}(\hat{y}_i - y_i)$$

The derivative $\frac{\partial \hat{y}_i}{\partial \mathbf{w}}$ is just the input $\mathbf{x}_i$ multiplied by the derivative of the activation. For simplicity, let's assume linear activation, so this is just $\mathbf{x}_i$:

$$\therefore \frac{\partial \text{loss}}{\partial \mathbf{w}} = \mathbf{X}^T\text{grad\_output}$$

The gradient for the bias is simpler: $$\frac{\partial \text{loss}}{\partial b} = \sum_{i=1}^{n}\text{grad\_output}_i$$

Finally, we update the weights and bias by gradient descent:

$$\mathbf{w} = \mathbf{w} - \eta \frac{\partial \text{loss}}{\partial \mathbf{w}}$$

$$b = b - \eta \frac{\partial \text{loss}}{\partial b}$$

where $\eta$ is the learning rate.
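The formulas above can be checked numerically for a linear model $\hat{y}_i = \mathbf{w} \cdot \mathbf{x}_i + b$. The sketch below takes $\text{grad\_output}_i = \frac{2}{n}(\hat{y}_i - y_i)$ as the per-sample derivative of the loss with respect to the prediction, forms $\mathbf{X}^T\text{grad\_output}$, and compares the bias gradient with a finite difference. The dataset, initial parameters, and step size `eta` are made-up values for illustration.

```python
def predict(X, w, b):
    # Linear model: y_hat_i = w . x_i + b
    return [sum(wj * xj for wj, xj in zip(w, x)) + b for x in X]

def mse(y, y_hat):
    return sum((yi - yh) ** 2 for yi, yh in zip(y, y_hat)) / len(y)

def mse_gradients(X, y, w, b):
    n = len(y)
    y_hat = predict(X, w, b)
    # Per-sample derivative of the loss with respect to each prediction
    grad_output = [2.0 / n * (yh - yi) for yi, yh in zip(y, y_hat)]
    # dL/dw = X^T grad_output
    grad_w = [sum(g * x[j] for g, x in zip(grad_output, X)) for j in range(len(w))]
    # dL/db = sum of grad_output
    grad_b = sum(grad_output)
    return grad_w, grad_b

# Small made-up dataset (values are arbitrary)
X = [[0.0, 1.0], [1.0, 2.0], [2.0, 0.5], [3.0, 1.5]]
y = [1.0, 2.5, 3.0, 5.0]
w, b = [0.1, -0.2], 0.05

grad_w, grad_b = mse_gradients(X, y, w, b)

# Central finite-difference check on the bias gradient
eps = 1e-6
fd_b = (mse(y, predict(X, w, b + eps)) - mse(y, predict(X, w, b - eps))) / (2 * eps)
print(grad_w, grad_b, fd_b)

# One gradient descent step
eta = 0.05
w_new = [wj - eta * gj for wj, gj in zip(w, grad_w)]
b_new = b - eta * grad_b
loss_before = mse(y, predict(X, w, b))
loss_after = mse(y, predict(X, w_new, b_new))
print(loss_before, "->", loss_after)
```

With this small step size the loss decreases after one update; a much larger `eta` would not be guaranteed to do so.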

Variants:¶

There are several variants of Gradient Descent that modify or enhance these basic steps, including:

Stochastic Gradient Descent (SGD): Instead of using the entire dataset to compute the gradient, SGD uses a single random data point (or a small batch, in mini-batch SGD) at each iteration. This adds noise to the gradient estimate, but each iteration is much cheaper, which often speeds up training overall, and the noise can help the iterates escape shallow local minima.

Momentum: Momentum methods use a moving average of past gradients to dampen oscillations and accelerate convergence, especially when the loss surface has narrow valleys with steep walls, where plain gradient descent zigzags across the valley instead of moving along it.

Adaptive Learning Rate Methods: Techniques like Adagrad, RMSprop, and Adam adjust the learning rate individually for each parameter, often leading to faster convergence.
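For comparison, here is a minimal sketch of the update rules for plain gradient descent, momentum, and Adam on a small ill-conditioned quadratic. The objective, learning rates, and step counts are arbitrary illustrative choices. The momentum update uses the accumulation form $v \leftarrow \beta v + g$; other texts use an averaged form, so treat the constants as one convention among several.

```python
import math

def grad(w):
    # Gradient of the ill-conditioned quadratic J(w) = 0.5 * (w0^2 + 25 * w1^2)
    return [w[0], 25.0 * w[1]]

def loss(w):
    return 0.5 * (w[0] ** 2 + 25.0 * w[1] ** 2)

def run_gd(w, lr, steps):
    for _ in range(steps):
        g = grad(w)
        w = [wi - lr * gi for wi, gi in zip(w, g)]
    return w

def run_momentum(w, lr, steps, beta=0.9):
    v = [0.0] * len(w)
    for _ in range(steps):
        g = grad(w)
        v = [beta * vi + gi for vi, gi in zip(v, g)]  # accumulate past gradients
        w = [wi - lr * vi for wi, vi in zip(w, v)]
    return w

def run_adam(w, lr, steps, beta1=0.9, beta2=0.999, eps=1e-8):
    m = [0.0] * len(w)  # running mean of gradients
    v = [0.0] * len(w)  # running mean of squared gradients
    for t in range(1, steps + 1):
        g = grad(w)
        m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]
        v = [beta2 * vi + (1 - beta2) * gi ** 2 for vi, gi in zip(v, g)]
        m_hat = [mi / (1 - beta1 ** t) for mi in m]  # bias correction
        v_hat = [vi / (1 - beta2 ** t) for vi in v]
        # Per-parameter step size: each coordinate is scaled by its own sqrt(v_hat)
        w = [wi - lr * mh / (math.sqrt(vh) + eps)
             for wi, mh, vh in zip(w, m_hat, v_hat)]
    return w

w0 = [5.0, 1.0]
results = {
    "gd": loss(run_gd(w0, 0.03, 100)),
    "momentum": loss(run_momentum(w0, 0.03, 100)),
    "adam": loss(run_adam(w0, 0.1, 100)),
}
for name, value in results.items():
    print(name, value)
```

The relative ranking of the three methods depends strongly on the problem and the hyperparameters, so this toy run should not be read as a general comparison.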

Limitations:¶

- It may converge to a local minimum instead of a global minimum if the loss surface is not convex.

- Convergence can be slow if the learning rate is not properly tuned.

- Sensitive to the scaling of features; poorly scaled data can cause the gradient descent to take a long time to converge or even diverge.

Effect of learning rate¶

The learning rate in gradient descent is a critical hyperparameter that can significantly influence the model's training dynamics. Let us now look at how the learning rate affects local minima, overshooting, and convergence:

- Effect on Local Minima:

High Learning Rate: A large learning rate can help the model escape shallow local minima, leading to the discovery of deeper (potentially global) minima. However, it can also cause instability, making it hard to settle in a good solution.

Low Learning Rate: A small learning rate may cause the model to get stuck in local minima, especially in complex loss landscapes with many shallow valleys. The model can lack the "energy" to escape these regions.

- Effect on Overshooting:

High Learning Rate: If the learning rate is set too high, the updates may be so large that they overshoot the minimum and cause the algorithm to diverge, or oscillate back and forth across the valley without ever reaching the bottom. This oscillation can be detrimental to convergence.

Low Learning Rate: A very low learning rate will likely avoid overshooting but may lead to extremely slow convergence, as the updates to the parameters will be minimal. It might result in getting stuck in plateau regions where the gradient is small.

- Effect on Convergence:

High Learning Rate: While it can speed up convergence initially, a too-large learning rate risks instability and divergence, as mentioned above. The model may never converge to a satisfactory solution.

Low Learning Rate: A small learning rate gives more stable and reliable convergence but can significantly slow down the process. If set too low, training may also appear to converge prematurely: the iteration limit or the loss-change threshold is hit while the parameters are still far from a minimum.
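These regimes are easy to reproduce on the one-dimensional objective $J(w) = w^2$, where each gradient descent step multiplies $w$ by $(1 - 2\eta)$. The four learning rates below are illustrative values chosen to land in the slow, fast, oscillating, and diverging regimes for this particular function.

```python
def run(lr, steps=20, w0=1.0):
    """Gradient descent on J(w) = w^2, whose gradient is 2w."""
    w = w0
    for _ in range(steps):
        w = w - lr * 2.0 * w
    return w

for lr in (0.01, 0.4, 0.95, 1.1):
    print(f"lr = {lr:<4}: w after 20 steps = {run(lr): .4e}")
```

For this function, $\eta = 0.01$ shrinks $w$ by only 2% per step, $\eta = 0.4$ converges quickly, $\eta = 0.95$ flips the sign of $w$ at every step while slowly shrinking it, and $\eta = 1.1$ makes $|w|$ grow without bound.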

Finding the Right Balance:¶

Choosing the right learning rate is often a trial-and-error process, sometimes guided by techniques like learning rate schedules or adaptive learning rate algorithms like Adam. These approaches attempt to balance the trade-offs by adjusting the learning rate throughout training, often starting with larger values to escape local minima and avoid plateaus, then reducing it to stabilize convergence.
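As a rough illustration of a learning rate schedule, the sketch below compares a constant learning rate with an exponentially decaying one, $\eta_t = \eta_0 \gamma^t$, on $J(w) = w^2$. The function names and the values $\eta_0 = 0.95$ and $\gamma = 0.9$ are assumptions picked so that the constant rate oscillates; real schedules are tuned per problem.

```python
def run_constant(lr, steps=20, w0=1.0):
    """Gradient descent on J(w) = w^2 with a fixed learning rate."""
    w = w0
    for _ in range(steps):
        w = w - lr * 2.0 * w
    return w

def run_exponential_decay(lr0, gamma, steps=20, w0=1.0):
    """Same problem, but the learning rate shrinks geometrically each step."""
    w = w0
    for t in range(steps):
        lr_t = lr0 * gamma ** t
        w = w - lr_t * 2.0 * w
    return w

w_const = run_constant(0.95)
w_decay = run_exponential_decay(0.95, gamma=0.9)
print(f"constant lr: |w| = {abs(w_const):.3e}")
print(f"decayed lr:  |w| = {abs(w_decay):.3e}")
```

Here the decayed schedule ends closer to the minimum because the step size leaves the oscillating regime after a few iterations. If the rate decays too fast, progress can stall before the minimum is reached.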

# Implement PyTorch Training Loop Function

def train_network(model, x_train, u_train, epochs=5000, lr=0.01):

    """Train a neural network model using MSE loss and Adam optimizer"""

    criterion = nn.MSELoss() # Mean Squared Error Loss

    optimizer = optim.Adam(model.parameters(), lr=lr) # Adam optimizer

    losses = []

    for epoch in range(epochs):

        # Forward pass: compute predictions

        predictions = model(x_train)

        # Calculate loss

        loss = criterion(predictions, u_train)

        # Backward pass: compute gradients

        optimizer.zero_grad() # Clear previous gradients

        loss.backward() # Compute gradients of the loss w.r.t. parameters

        # Optimizer step: update parameters

        optimizer.step() # Perform a single optimization step

        losses.append(loss.item())

        # Optional: Print loss periodically

        # if (epoch + 1) % 1000 == 0: